Entrepreneurial Geekiness

PyData Conference & AHL Hackathon

Our 5th annual PyDataLondon conference will run this April 27-29th. This year we grow from 330 to 500 attendees. As before this remains a volunteer-run conference (with support from the lovely core NumFOCUS team), just as the monthly meetup is a volunteer-run event.

The Call for Proposals is open until the start of March (you have 2 weeks!) – first-time speakers are keenly sought. Our mentorship programme is in full swing to help new speakers craft a good proposal before it hits the (volunteer-run) review committee. As usual we expect 2-3 submissions per speaking slot, so the competition to speak at PyDataLondon will remain high. We also have a set of diversity grants to support those who might otherwise not attend the conference – don’t be afraid to apply for a grant.

Tickets are on sale already and this year’s programme will go live towards the end of March. If you’d like a taste of what goes on at a PyDataLondon conference, see my write-up from 2017 and the 2017 schedule.

The week before the conference our generous meetup hosts AHL are holding a Python Data Science Hackathon. You should definitely apply if you’re anywhere near London (I have!). They have budget to fly in some core developers – if your project hasn’t yet applied and you’re interested in being involved with a large open-source science hackathon, please do visit their site and apply. Here you have a chance to make a strong contribution to the open source tools that we all use.

Finally – if you’re interested in learning about the jobs that are going in the UK Python Data Science world, take a look at my data science jobs list. 7-10 jobs get emailed out every 2 weeks to over 900 people and people are successfully getting new jobs via this list.

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.

Python Data Science jobs list into 2018

I’ve been building my data-science jobs list for a couple of years now. Almost 800 folk are on the list and they receive an email update once every two weeks containing around seven job ads. Many active members of PyDataLondon are on the list.

The ads are mostly London-based, with a few spreading into Europe. In addition to the jobs I’ve added a “book of the month” and “video of the month” recommendation along with an open source project that is after contributions from the community. If a selection of jobs and educational recommendations every couple of weeks feels like a useful addition to your inbox – join the mailchimp list here. Your email is never revealed and you’re in control: you can unsubscribe at any time.

“I’m very grateful for Ian’s job list as it enabled me to find a DS job in an interesting and meaningful domain, and furthermore connected me with likeminded folk. Strongly recommend.” – Frank Kelly, Senior Data Scientist @HAL24K

Companies who have advertised include AHL (our host for PyDataLondon), BBC, Channel 4, QBE Insurance, Willis Towers Watson, UCL and Cambridge Universities, HAL24K, Just Eat, Oxbotica, SkyScanner and many more. Roles range from junior to head-of-dept for data science and data engineering. Most are permanent roles, some are contract roles.

“After placing a contract ad on this list I was contacted by a number of high quality and enthusiastic data scientists, who all proposed innovative and exciting solutions to my research problem, and were able to explain their proposals clearly to a non-specialist; the quality of responses was so high that I was presented with a real dilemma in choosing who to work with.” – Hazel Wilkinson, Cambridge University

Anyone can post to the list. PyDataLondon members get to make a first post to the list gratis (I take the time cost as a part of my usual activity of community-building in London). All posts come via me so I can check that they’re suitable, and they go out every two weeks for three iterations. Contact me directly (ian.ozsvald at modelinsight dot io) if you’re interested in making a post.


PyDataBudapest and “Machine Learning Libraries You’d Wish You’d Known About”

I’m back at BudapestBI and this year it has its first PyDataBudapest track. Budapest is fun! I’ve given a second iteration of my talk, a slightly updated “Machine Learning Libraries You’d Wish You’d Known About” (updated from PyDataCardiff two weeks back). When I was here to give an opening keynote talk two years back the conference was a bit smaller; it has grown by over 100 folk since then. There’s also a stronger emphasis on open source R and Python tools. As before, the quality of the members here is high – the conversations are great!

During my talk I used my Explaining Regression Predictions Notebook to cover:

- Dask to speed up Pandas

- TPOT to automate sklearn model building

- Yellowbrick for sklearn model visualisation

- ELI5 with Permutation Importance and model explanations

- LIME for model explanations
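Of the ideas in the list above, Permutation Importance is the easiest to show without any libraries. The following is a minimal sketch of the idea only, not ELI5's API: shuffle one feature column at a time and measure how much worse the model's error gets. The `toy_model` function and the random data are hypothetical stand-ins for a fitted estimator and a real dataset.

```python
import random


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Return, per column, the mean increase in MSE after shuffling that column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [predict(row) for row in X])
    importances = []
    for col in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle a copy of one column, leaving every other column intact
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            score = mean_squared_error(y, [predict(row) for row in X_perm])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical 'fitted model' that only ever looks at feature 0."""
    return 3.0 * row[0]


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [3.0 * row[0] for row in X]

importances = permutation_importance(toy_model, X, y)
print(importances)  # feature 0 matters, feature 1 is ignored by the model
```

A feature the model ignores gets an importance of zero because shuffling it cannot change any prediction. ELI5 applies the same idea to real sklearn estimators and scoring functions.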

Some audience members asked about co-linearity detection and explanation. Whilst I don’t have a good answer for identifying these relationships, I’ve added a seaborn pairplot, a correlation plot and the Pandas Profiling tool to the Notebook which help to show these effects.
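As a dependency-free illustration of what a correlation plot surfaces, here is a minimal sketch that computes Pearson correlations between every pair of columns and flags the strongly related ones. The column names, values and the 0.9 threshold are all hypothetical. Pairwise correlation only catches linear relationships between two features, so it is a first check rather than a full answer to co-linearity.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlated_pairs(columns, threshold=0.9):
    """Return (name_a, name_b, r) for column pairs with |r| >= threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            flagged.append((name_a, name_b, round(r, 3)))
    return flagged


# Hypothetical housing-style columns: floor_area tracks rooms almost linearly
columns = {
    "rooms": [3, 4, 5, 6, 7, 8],
    "floor_area": [60, 82, 99, 121, 140, 161],
    "distance_to_centre": [5, 1, 8, 2, 9, 3],
}

flagged = correlated_pairs(columns)
print(flagged)
```

With Pandas the same table comes from `DataFrame.corr()`, which is the usual starting point for a correlation heatmap.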

Although it is complicated, I’m still pretty happy with this ELI5 plot that’s explaining feature contributions to a set of cheap-to-expensive houses from the Boston dataset:

I’m planning to do some training on these sorts of topics next year. Join my training list if that might be of use.


PyConUK 2017, PyDataCardiff and “Machine Learning Libraries You’d Wish You’d Known About”

A week back I had the pleasure to talk on machine learning at PyConUK 2017 in the inaugural PyDataCardiff track. Tim Vivian-Griffiths and colleagues did a wonderful job building our second PyData conference event in the UK. The PyConUK conference just keeps getting better – 700 folk, 5 tracks, a huge kids track and lots of sub-events. Pythontastic! Cat Lamin has a lovely write-up of the main conference.

If you’re interested in PyDataCardiff then note that Tim has set up an announcements list. Join it to hear about meetup events around Cardiff and Bristol.

I spoke on the Saturday on “Machine Learning Libraries You’d Wish You’d Known About” (slides here) – this is a precis of topics that I figured out this year:

- Using Pandas multi-core with Dask

- Automating your machine learning with TPOT on sklearn

- Visualising your machine learning with YellowBrick

- Explaining why you get certain machine learning answers with ELI5 and LIME

- See my “Explaining Regression” Notebook for lots of examples with YellowBrick, ELI5, LIME and more (I used this to build my talk)

As with last year I was speaking in part to existing engineers who are ML-curious, to show ways of approaching machine learning diagnosis with an engineer’s mindset. Last year I introduced Random Forests for engineers using a worked example. Below you’ll find the video for this year’s talk:

I’m planning to do more teaching on data science and Python in 2018 – if this might interest you, please join my training mailing list. Posts will go out rarely to announce new public and private training sessions that’ll run in the UK.

At the end of my talk I made a request of the audience, and I’m going to start doing this more frequently. My request was “please send me a physical postcard if I taught you something” – I’d love to build up some evidence on my wall that these talks are useful. I received my first postcard a few days back and I’m rather stoked. Thank you Pieter! If you want to send me a postcard, just send me an email. Do please remember to thank your speakers – it is a tiny gesture that really carries weight.

Thanks to O’Reilly I also got to participate in another High Performance Python signing, this time with Steve Holden (Python in a Nutshell: A Desktop Quick Reference), Harry Percival (Test-Driven Development with Python 2e) and Nicholas Tollervey (Programming with MicroPython):

I want to say a huge thanks to everyone I met – I look forward to a bigger and better PyConUK and PyDataCardiff next year!

If you like data science and you’re in the UK, please do check out our PyDataLondon meetup. If you’re after a job, I have a data scientist’s jobs list.


Kaggle’s Mercedes-Benz Greener Manufacturing

Kaggle are running a regression machine learning competition with Mercedes-Benz right now. It closes in a week and runs for about 6 weeks overall. I’ve managed to squeeze in 5 days to have a play (I managed about 10 days on the previous Quora competition). My goal this time was to focus on new tools that make it faster to get to ‘pretty good’ ML solutions. Specifically I wanted to play with:

- TPOT “auto scikit-learn” (but not the auto-sklearn package which is related)

- The YellowBrick sklearn visualiser

Most of the 5 days were spent either learning the above tools or making some suggestions for YellowBrick, so I didn’t get as far as creative feature engineering. When I first wrote this I was in the top 50th percentile. Now the competition has finished I’m at rank 1497 (top 37th percentile) on the leaderboard using raw features, some dimensionality reduction and various estimators, with 5 days of effort.

TPOT is rather interesting – it uses a genetic algorithm approach to evolve the hyperparameters of one or more (Stacked) estimators. One interesting outcome is that TPOT was presenting good models that I’d never have used – e.g. an AdaBoostRegressor & LassoLars or GradientBoostingRegressor & ElasticNet.
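To give a feel for the evolutionary idea, here is a toy sketch of a genetic-style search over two hyperparameters. It is not TPOT's algorithm or API (TPOT evolves whole pipelines); it only shows the select-the-best-then-mutate loop. The `fitness` function is a hypothetical stand-in for a cross-validated score, and the `alpha` and `depth` parameters are made up for illustration.

```python
import random


def fitness(params):
    """Hypothetical stand-in for a cross-validated score (higher is better).

    By construction the best score is at alpha=0.3, depth=6.
    """
    return -((params["alpha"] - 0.3) ** 2) - 0.01 * (params["depth"] - 6) ** 2


def random_params(rng):
    """Draw a random candidate configuration."""
    return {"alpha": rng.uniform(0.0, 1.0), "depth": rng.randint(1, 12)}


def mutate(params, rng):
    """Return a slightly perturbed copy, clipped to the allowed ranges."""
    child = dict(params)
    child["alpha"] = min(1.0, max(0.0, child["alpha"] + rng.gauss(0, 0.1)))
    child["depth"] = min(12, max(1, child["depth"] + rng.choice([-1, 0, 1])))
    return child


def evolve(generations=30, population_size=20, keep=5, seed=0):
    """Keep the best `keep` candidates each generation and refill by mutation."""
    rng = random.Random(seed)
    population = [random_params(rng) for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        survivors = population[:keep]
        history.append(fitness(survivors[0]))  # best score seen this generation
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(population_size - keep)
        ]
        population = survivors + children
    return max(population, key=fitness), history


best, history = evolve()
print(best, history[-1])
```

Because the survivors are carried over unchanged, the best score can never get worse from one generation to the next. In a real search each fitness call is a full model fit, which is why this sort of tool wants so much CPU time.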

TPOT works with all sklearn-compatible classifiers including XGBoost (examples) but recently there’s been a bug with n_jobs and multiple processes. Because of this the current version has XGBoost disabled, although it now looks like that bug has been fixed. As a result I didn’t get to use XGBoost inside TPOT. I did play with it separately but the stacked estimators from TPOT were superior. Getting up and running with TPOT took all of 30 minutes, and after that I’d leave it to run overnight on my laptop. It definitely wants lots of CPU time. It is worth noting that auto-sklearn has a similar n_jobs bug and the issue is known in sklearn.

It does occur to me that almost all of the models developed by TPOT are subsequently discarded (you can get a list of configurations and scores). There’s almost certainly value to be had in building averaged models of combinations of these, but I didn’t get to experiment with this.

Having developed several different stacks of estimators my final combination involved averaging these predictions with the trustable-model provided by another Kaggler. The mean of these three pushed me up to 0.55508. My only feature engineering involved various FeatureUnions with the FunctionTransformer based on dimensionality reduction.
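Averaging predictions is simple enough to sketch without any libraries. The example below assumes the leaderboard score is an R² value and uses three sets of made-up predictions; the numbers are chosen so that the models' errors partly cancel, which is the situation where a mean helps most. Real gains are usually far smaller.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def average_predictions(*prediction_sets):
    """Element-wise mean of several equal-length prediction lists."""
    return [sum(values) / len(values) for values in zip(*prediction_sets)]


# Hypothetical targets and predictions from three different models
y_true = [100.0, 110.0, 90.0, 105.0, 95.0]
model_a = [104.0, 106.0, 94.0, 101.0, 99.0]  # errors alternate +4 / -4
model_b = [96.0, 114.0, 86.0, 109.0, 91.0]   # errors alternate -4 / +4
model_c = [102.0, 112.0, 92.0, 107.0, 97.0]  # constant error of +2

blended = average_predictions(model_a, model_b, model_c)

for name, preds in [("a", model_a), ("b", model_b), ("c", model_c), ("mean", blended)]:
    print(name, round(r2_score(y_true, preds), 4))
```

The blend only beats its members when their errors are not strongly correlated, so averaging near-identical models gains very little.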

YellowBrick was presented at our PyDataLondon 2017 conference (write-up) this year by Rebecca (we also did a book signing). I was able to make some suggestions for improvements on the RegressionPlot and PredictionError along with sharing some notes on visualising tree-based feature importances (along with noting a demo bug in sklearn). Having more visualisation tools can only help, I hope to develop some intuition about model failures from these sorts of diagrams.

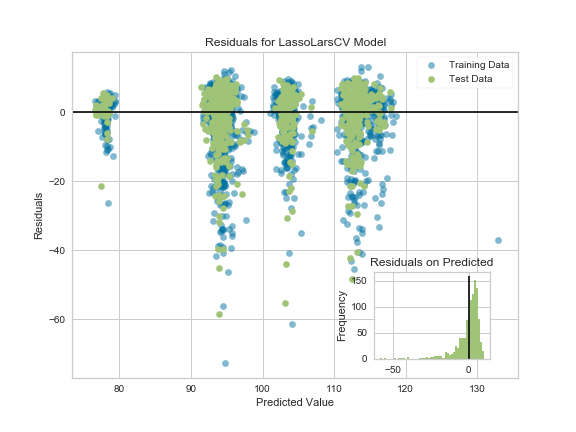

Here’s a ResidualPlot with my added inset prediction errors distribution, I think that this should be useful when comparing plots between classifiers to see how they’re failing:

