Entrepreneurial Geekiness

Kaggle’s Quora Question Pairs Competition

Kaggle’s Quora Question Pairs competition has just closed and I’m pleased to say that with 10 days of effort I finished in the top 40% (rank 1346 of 3396 on the private leaderboard). Having just run and spoken at PyDataLondon 2017, taught ML in Romania and worked on several client projects, I only freed up time right at the end of this competition. Despite joining at the end I had immense fun – this was my first ‘proper’ Kaggle competition.

I figured a short retrospective here might be a useful reminder to myself in the future. Things that worked well:

- Use of github, Jupyter Notebooks, my research module template

- Python 3.6, scikit-learn, pandas

- RandomForests (some XGBoost but ultimately just RFs)

- Dask (great for using all cores when feature engineering with Pandas apply)

- Lots of text similarity measures, word2vec, some Part of Speech tagging

- Some light text clean-up (punctuation, whitespace, some mixed case normalisation)

- Spacy for PoS noun extraction, some NLTK

- Splitting feature generation and ML exploitation into different Notebooks

- Lots of visualisation of each distance measure by class (mainly matplotlib histograms on single features)

- Fully reproducible Notebooks with fixed seeds

- Debugging code to diagnose the most-wrong guesses from the model (pulling out features and the raw questions was often enough to get a feel for “what it missed”, which led to thoughts on new features that might help)
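
The “most-wrong guesses” diagnostic in the last item can be sketched roughly as below. This is a minimal illustration and not the competition code: the column names (`question1`, `question2`, `is_duplicate`, `predicted_proba`), the helper `most_wrong` and the tiny example data are all assumptions made for the example.

```python
import pandas as pd


def most_wrong(df, label_col="is_duplicate", proba_col="predicted_proba", n=5):
    """Return the n rows whose predicted probability is furthest from the true label."""
    error = (df[label_col] - df[proba_col]).abs()
    return df.assign(abs_error=error).sort_values("abs_error", ascending=False).head(n)


# Made-up rows; in practice the probabilities would come from a held-out set
examples = pd.DataFrame({
    "question1": ["How do I learn Python?", "What is ML?", "Is tea healthy?"],
    "question2": ["How can I learn Python?", "What is a mortgage?", "Is coffee healthy?"],
    "is_duplicate": [1, 0, 0],
    "predicted_proba": [0.15, 0.10, 0.80],
})

worst = most_wrong(examples, n=2)
print(worst[["question1", "question2", "is_duplicate", "predicted_proba", "abs_error"]])
```

Reading the raw questions next to the error makes it easier to spot what a new feature would need to capture.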

Things that I didn’t get around to trying due to lack of time:

- PoS named entities in Spacy, my own entity recogniser

- GloVe, wordrank, fasttext

- Clustering around topics

- Text clean-up (synonyms, weights & measures normalisation)

- Use of external corpus (e.g. Stackoverflow) for TF-IDF counts

- Dask on EC2

Things that didn’t work so well:

- Fully reproducible Notebooks (great!) to generate features with no caching of no-need-to-rebuild-yet-again features, so I did a lot of recalculating features (which really hurt in the last 2 days) – possible solution below with named columns

- Notebooks are still a PITA for debugging, attaching a console with --existing works ok until things start to crash and then it gets sticky

- Running out of 32GB of RAM several times on my laptop and having a semi-broken system whilst trying to persist partial models to disk – I should have started with an AWS deployment earlier so I could easily turn on more cores+RAM as needed

- I barely checked the Kaggle forums (only reading the Notebooks concerning the negative resampling requirement) so I missed a whole pile of tricks shared by others. Some I folded in on the last day but there’s a huge pile that I missed – I think I might have snuck into the top 20% of rankings if I’d used this public information

- Calibrating RandomForests (I’m pretty convinced I did this correctly but it didn’t improve things, I’m not sure why)
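
For readers who have not calibrated a forest before, one common approach in scikit-learn is `CalibratedClassifierCV`. The sketch below is an assumption about how this might be set up, not the code used in the competition; it runs on synthetic data from `make_classification`, and the choice of isotonic calibration with 3 folds is arbitrary.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import log_loss
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the engineered features (not the Quora data)
X, y = make_classification(n_samples=2000, n_features=10, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Uncalibrated forest
rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X_train, y_train)
raw_proba = rf.predict_proba(X_test)[:, 1]

# Wrap a fresh forest; the calibrator is fitted on held-out folds of the training data
calibrated = CalibratedClassifierCV(
    RandomForestClassifier(n_estimators=100, random_state=0), method="isotonic", cv=3
)
calibrated.fit(X_train, y_train)
calibrated_proba = calibrated.predict_proba(X_test)[:, 1]

# Compare on a probability-sensitive metric; calibration does not always lower it
print("raw log loss:       ", log_loss(y_test, raw_proba))
print("calibrated log loss:", log_loss(y_test, calibrated_proba))
```

Comparing the two scores on held-out data is the quickest way to see whether calibration is helping on a given feature set.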

Dask definitely made parallelisation easier with only a few lines of overhead in a function beyond a normal call to apply. The caching, if using something like luigi, would add a lot of extra engineered overhead – not so useful in a rapidly iterating 10 day competition.

I think next time I’ll try using version-named columns in my DataFrames. Rather than having e.g. “unigram_distance_raw_sentences” I might add “_v0”; if that calculation process is never updated then I can just use a pre-built version of the column. This is a poor man’s caching strategy. If any dependencies existed then I guess luigi/airflow would be the next step. For now at least I think a version number will solve my most immediate time-sink in recent days.
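
One way the version-named column idea might look in code is sketched below. Everything here is hypothetical: the helper `add_versioned_column`, the one-pickle-file-per-column layout and the toy `length_difference` feature are assumptions, and the sketch assumes the rows and index of the DataFrame are unchanged between runs.

```python
import tempfile
from pathlib import Path

import pandas as pd


def add_versioned_column(df, name, version, builder, cache_dir):
    """Attach column '<name>_v<version>', loading it from disk if already built."""
    column = f"{name}_v{version}"
    cache_path = Path(cache_dir) / f"{column}.pkl"
    if cache_path.exists():
        values = pd.read_pickle(cache_path)  # reuse the pre-built column
    else:
        values = builder(df)  # the expensive feature calculation
        cache_path.parent.mkdir(parents=True, exist_ok=True)
        values.to_pickle(cache_path)
    df[column] = values
    return df


build_calls = []


def length_difference(frame):
    """Toy feature standing in for a slow distance measure."""
    build_calls.append(1)
    return (frame["question1"].str.len() - frame["question2"].str.len()).abs()


questions = pd.DataFrame({
    "question1": ["How do I learn Python?", "What is ML?"],
    "question2": ["How can I learn Python?", "What is a mortgage?"],
})

with tempfile.TemporaryDirectory() as cache_dir:
    first = add_versioned_column(questions.copy(), "length_difference", 0, length_difference, cache_dir)
    second = add_versioned_column(questions.copy(), "length_difference", 0, length_difference, cache_dir)

print(second)
print("builder ran", len(build_calls), "time(s)")
```

Bumping the version number when the calculation changes forces a rebuild, while untouched features are simply read back from disk.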

I hope to enter another competition soon. I’m also hoping to attend the London Kaggle meetup at some point to learn from others.

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.

PyDataLondon 2017 Conference write-up

Several weeks back we ran our 4th PyDataLondon (2017) conference – it was another smashing success! This builds on our previous 3 years of effort (2016, 2015, 2014) building both the conference and our over-subscribed monthly meetup. We’re grateful to our host Bloomberg for providing the lovely staff, venue and catering.

Really got inspired by @genekogan’s great talk on AI & the visual arts at @pydatalondon @annabellerol

Each year we try some new ideas – this year we tried:

- 4 hour Hackathon on NLP to help our keynote speakers from FullFact to try new ideas

- “Making your first open source submission” session to help 30 people step closer to contributing to open source (thanks Katy and Nick for leading that session!)

- A scavenger hunt

- A crazy pub-quiz (James Powell is responsible for blowing quite a few minds) on Saturday evening

- A longer-running Slack channel

- New co-chair Ruby and review committee lead Linda

- More financial support for speakers and attendees to increase diversity

- Several unconference slots (filled by volunteers on the day) covering Bayesian statistics and other topics

- Our first Women @ PyData reception just before the conference started

- More book signing

- Fast video turnaround into YouTube

- A drone giveaway (by sponsor [and my business partner] Endava)

pros: Great selection of talks for all levels and pub quiz cons: on a weekend, pub quiz (was hard). Overall would recommend 9/10 @harpal_sahota

We’re very thankful to all our sponsors for their financial support and to all our speakers for donating their time to share their knowledge. Personally I say a big thank-you to Ruby (co-chair) and Linda (review committee lead) – I resigned from both of these roles this year after 3 years and I’m very happy to have been replaced so effectively (ahem – Linda – you really have shown how much better the review committee could be run!). Ruby joined Emlyn as co-chair for the conference, I took a back-seat on both roles and supported where I could. Our volunteer team was great again – thanks Agata for pulling this together.

I believe we had 20% female attendees – up from 15% or so last year. Here’s a write-up from Srjdan and another from FullFact (and one from Vincent as chair at PyDataAmsterdam earlier this year) – thanks!

#PyDataLdn thank you for organising a great conference. My first one & hope to attend more. Will recommend it to my fellow humanists! @1208DL

For this year I’ve been collaborating with two colleagues – Dr Gusztav Belteki and Giles Weaver – to automate the analysis of baby ventilator data with the NHS. I was very happy to have the 3 of us present to speak on our progress, we’ve been using RandomForests to segment time-series breath data to (mostly) correctly identify the start of baby breaths on 100Hz single-channel air-flow data. This is the precursor step to starting our automated summarisation of a baby’s breathing quality.

Slides here and video below:

This updates our talk at the January PyDataLondon meetup. This collaboration came about after I heard of Dr. Belteki’s talk at PyConUK last year, whilst I was there to introduce RandomForests to Python engineers. You’re most welcome to come and join our monthly meetup if you’d like.

Many thanks to all of our sponsors again including Bloomberg for the excellent hosting and Continuum for backing the series from the start and NumFOCUS for bringing things together behind the scenes (and for supporting lots of open source projects – that’s where the money we raise goes to!).

There are plenty of other PyData and related conferences and meetups listed on the PyData website – if you’re interested in data then you really should get along. If you don’t yet contribute back to open source (and really – you should!) then do consider getting involved as a local volunteer. These events only work because of the volunteered effort of the core organising committees and extra hands (especially new members to the community) are very welcome indeed.

I’ll also note – if you’re in London or the south-east of the UK and you want to get a job in data science you should join my data scientist jobs email list, a set of companies who attended the conference have added their jobs for the next posting. Around 600 people are on this list and around 7 jobs are posted out every 2 weeks. Your email is always kept private.

Introduction to Random Forests for Machine Learning at the London Python Meetup

Last night I had the pleasure of returning to London Python to introduce Random Forests (this builds on my PyConUK 2016 talk from September). My goal was to give a pragmatic introduction to solving a binary classification problem (Kaggle’s Titanic) using scikit-learn. The talk (slides here) covers:

- Organising your data with Pandas

- Exploratory Data Visualisation with Seaborn

- Creating a train/test set and using a Dummy Classifier

- Adding a Random Forest

- Moving towards Cross Validation for higher trust

- Ways to debug the model (from the point of view of a non-ML engineer)

- Deployment

- Code for the talk is a rendered Notebook on github
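
The middle steps of that outline (a train/test split with a Dummy Classifier, then a Random Forest, then Cross Validation) can be sketched as below. This is not the code from the talk’s Notebook: it uses synthetic data from `make_classification` in place of the Titanic set, and the parameter values are arbitrary assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score, train_test_split

# Synthetic binary classification data standing in for the Titanic features
X, y = make_classification(n_samples=800, n_features=8, n_informative=4, random_state=42)

# Step 1: a single train/test split with a baseline that always predicts the majority class
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)
dummy = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
baseline_score = dummy.score(X_test, y_test)

# Step 2: a Random Forest on the same split
forest = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)
forest_score = forest.score(X_test, y_test)

# Step 3: cross validation gives several scores, so one lucky split matters less
cv_scores = cross_val_score(RandomForestClassifier(n_estimators=100, random_state=42), X, y, cv=5)

print(f"baseline accuracy: {baseline_score:.3f}")
print(f"forest accuracy:   {forest_score:.3f}")
print(f"5-fold CV accuracy: {cv_scores.mean():.3f} +/- {cv_scores.std():.3f}")
```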

I finished with a slide on Community (are you contributing? do you fulfill your part of the social contract to give back when you consume from the ecosystem?) and another pitching PyDataLondon 2017 (May 5-7th). My colleague Vincent is over from Amsterdam – he pitched PyDataAmsterdam (April 8-9th). The Call for Proposals is open for both, get your talk ideas in quickly please.

I’m really happy to see the continued growth of the London Python meetup, this was one of the earliest meetups I ever spoke at. The organisers are looking for speakers – do get in touch with them via meetup to tell them what you’d like to talk on.

PyDataLondon 2017 Conference Call for Proposals Now Open

This year we’ll hold our 4th PyDataLondon conference during May 5th-7th at Bloomberg (thanks Bloomberg!). Our Call for Proposals is open and will run during February (closing date to be confirmed so don’t just forget about it! – get on with making a draft submission soon).

We want talks at all levels (first timers especially welcome) from beginner to advanced, we want both regular talks and tutorials. We’ll be experimenting with the overflow room just as we did last year (possibly including Office Hours and ‘how to contribute to open source’ workshops).

Take a look at the 2016 Schedule to see the range of talks we had – engineering, machine learning, deep learning, visualisation, medical, finance, NLP, Big Data – all the usual suspects. We want all of these and more.

Personally I’m especially interested in:

- talks that cover the communication of complex data (think – bad Daily Mail Brexit graphics and how we might help people communicate complex ideas more clearly)

- encouraging collaborations between sub-groups.

- building on last year’s medical track with more medical topics

- getting journalists involved and sharing their challenges and triumphs

- and I’d love to be surprised – if you think it’ll fit – put in a submission!

The process of submitting is very easy:

- Go to the website and sign-up to make an account (you’ll need a new one even if you submitted last year)

- Post a first-draft title and abstract (just a one-liner will do if you’re pressed for time)

- Give it a day, log back in and iterate to expand on this

- If your submission is too short then the Review Committee will tell you that you don’t meet the minimum criteria, so you’ll get nagged – but only if you’ve made an attempt first!

- Iterate, integrating feedback from the Committee, to improve your proposal

- Keep your fingers crossed that you get selected

We’re also accepting Sponsorship requests, take a look on the main site and get in contact. We’ve already closed some of the options so if you’d like the price list – get in contact via the website right away.

I’d like to extend a Thank You to the new and larger Review Committee. I’ve handed over the reins on this, many thanks to the new committee for their efforts.

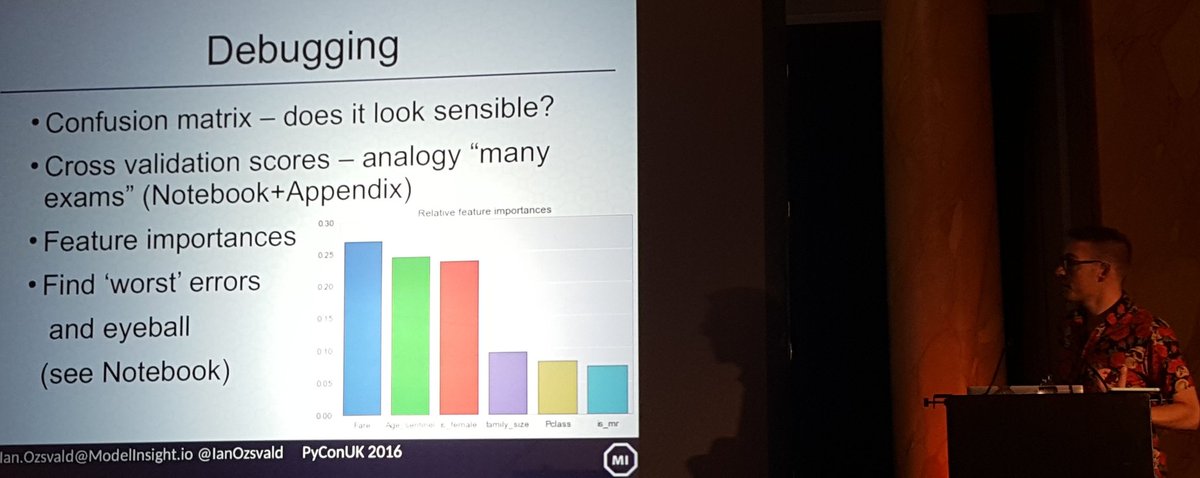

Practical ML for Engineers talk at #pyconuk last weekend

Last weekend I had the pleasure of introducing Machine Learning for Engineers (a practical walk-through, no maths) [YouTube video] at PyConUK 2016. Each year the conference grows and maintains a lovely vibe, this year it was up to 600 people! My talk covered a practical guide to a 2 class classification challenge (Kaggle’s Titanic) with scikit-learn, backed by a longer Jupyter Notebook (github) and further backed by Ezzeri’s 2 hour tutorial from PyConUK 2014.

Topics covered include:

- Going from raw data to a DataFrame (notable tip – read Katharine’s book on Data Wrangling)

- Starting with a DummyClassifier to get a baseline result (everything you do from here should give a better classification score than this!)

- Switching to a RandomForestClassifier, adding Features

- Switching from a train/test set to a cross validation methodology

- Dealing with NaN values using a sentinel value (robust for RandomForests, doesn’t require scaling, doesn’t require you to impute your own creative values)

- Diagnosing quality and mistakes using a Confusion Matrix and looking at very-wrong classifications to give you insight back to the raw feature data

- Notes on deployment
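
The sentinel-value and Confusion Matrix steps above can be illustrated with a short sketch. The data here is invented (random `age` and `fare` columns with a made-up `survived` rule), the sentinel `-1` is one possible choice that sits outside the real range of both features, and none of this is taken from the talk’s Notebook.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n_rows = 600
df = pd.DataFrame({
    "age": rng.uniform(1, 80, n_rows),
    "fare": rng.uniform(5, 100, n_rows),
})
# Invented target loosely tied to the features, purely for illustration
df["survived"] = ((df["fare"] > 50) | (df["age"] < 12)).astype(int)
# Knock out roughly 20% of the ages to mimic missing data
df.loc[rng.random(n_rows) < 0.2, "age"] = np.nan

# Replace NaN with a sentinel that cannot occur naturally; no scaling or imputation model needed
features = df[["age", "fare"]].fillna(-1)

X_train, X_test, y_train, y_test = train_test_split(
    features, df["survived"], test_size=0.25, random_state=0
)
clf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)
predictions = clf.predict(X_test)

# Rows are the true classes, columns are the predicted classes
cm = confusion_matrix(y_test, predictions)
print(cm)
```

The off-diagonal cells count the mistakes, which is where looking back at the raw rows tends to pay off.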

I had to cover the above in 20 minutes, obviously that was a bit of a push! I plan to cover this talk again at regional meetups, probably with 30-40 minutes. As it stands the talk (github) should lead you into the Notebook and that’ll lead you to Ezzeri’s 2 hour tutorial. This should be enough to help you start on your own 2 class classification challenge, if your data looks ‘somewhat like’ the Titanic data.

I’m generally interested in the idea of helping more engineers get into data science and machine learning. If you’re curious – I have a longer set of notes called Data Science Delivered and some vague plans to maybe write a book (maybe) – for the book join the mailing list here if you’d like to hear more (no hard sell, almost no emails at the moment, I’m still figuring out if I should do this).

You might also want to follow-up on Katharine Jarmul’s data wrangling talk and tutorial, Nick Radcliffe’s Test Driven Data Analysis (with new automated TDD-for-data tool to come in a few months), Tim Vivian-Griffiths’ SVM Diagnostics, Dr. Gusztav Belteki’s Ventilator medical talk, Geoff French’s Deep Learning tutorial and Marco Bonzanini and Miguel’s Intro to ML tutorial. The videos are probably in this list.

If you like the above then do think on coming to our monthly PyDataLondon data science meetups near London Bridge.

PyConUK itself has grown amazingly – the core team put in a huge amount of effort. It was very cool to see the growth of the kids sessions, the trans track, all the tutorials and the general growth in the diversity of our community’s membership. I was quite sad to leave at lunch on the Sunday – next year I plan to stay longer, this community deserves more investment. If you’ve yet to attend a PyConUK then I strongly urge you to think on submitting a talk for next year and definitely suggest that you attend.

The organisers were kind enough to let Kat and myself do a book signing, I suggest other authors think on joining us next year. Attendees love meeting authors and it is yet another activity that helps bind the community together.
