Entrepreneurial Geekiness

Scikit-learn training in London this April 7-8th

We’re running a 2 day scikit-learn and statsmodels training course through my ModelInsight with Jeff Abrahamson (ex-Google) at the start of April (7-8th) in central London. You should join this course if you’d like to:

- confidently use scikit-learn to solve machine learning problems

- strengthen your statistical foundations so you know both what to use and why you should use it

- learn how to use statsmodels to build statistical models that represent your business challenges

- improve your matplotlib skills so you can visually communicate your findings with your team

- have lovely pub lunches both days in the company of your fellow students to build your network and talk through your work needs with smart colleagues

The early bird tickets run out Monday night, so if you want one you should go buy it now. From Tuesday we’ll continue selling at the regular price.

I’ve announced the early-bird tickets on our low-volume London Data Science Training List. If you’re interested in Python-related Data Science training then you probably want to join that list (it is managed by MailChimp, you can unsubscribe at any time and we’d never share your email with others).

We’re also very keen to learn what other training you need. Here’s a very simple survey (no sign-up required); tell us what you need and we’ll work to deliver the right courses.

I hope to see you along at a future PyDataLondon meetup!

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.

Data-Science stuff I’m doing this year

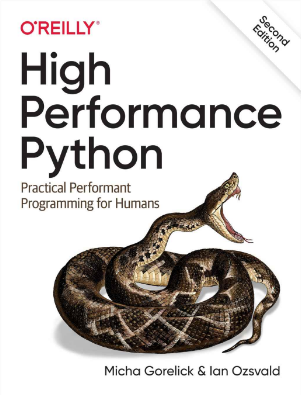

2014 was an interesting year and 2015 looks to be even richer. Last year I got to publish my High Performance Python book, help co-organise the rather successful PyDataLondon2014 conference, teach High Performance Python in public (slides online) and in private, keynote on The Real Unsolved Problems in Data Science and start my ModelInsight AI agency. That was a busy year (!) but deeply rewarding.

This year our consulting is branching out – we’ve already helped a new medical start-up define their data offering, I’m mentoring another data scientist (to avoid 10 years of my mistakes!) and we’re deploying new text mining IP for existing clients. We’ve got new private training this April for Machine Learning (scikit-learn) and High Performance Python (announce list) and Spark is on my radar.

Python’s role in Data Science has grown massively (I think we have 5 euro-area Python-Data-Science conferences this year) and I’m keen to continue building the London and European scenes.

I’m particularly interested in dirty data and ways we can efficiently clean it up (hence my Annotate.io lightning talk a week back). If you have problems with dirty data I’d love to chat and maybe I can share some solutions.

For PyDataLondon-the-conference we’re getting closer to fixing our date (late May/early June); join this announce list to hear when we have our key dates. In a few weeks we have our 10th monthly PyDataLondon meetup. You should join the group, as I write up each event for those who can’t attend so you’ll always know what’s going on. To keep the meetup from degenerating into a shiny-suit-fest I’ve set up a separate data science jobs list; I curate it and only send relevant contract/permie job announcements.

This year I hope to be at PyDataParis, PyConSweden, PyDataLondon, EuroSciPy and PyConUK – do come say hello if you’re around!

Starting Spark 1.2 and PySpark (and ElasticSearch and PyPy)

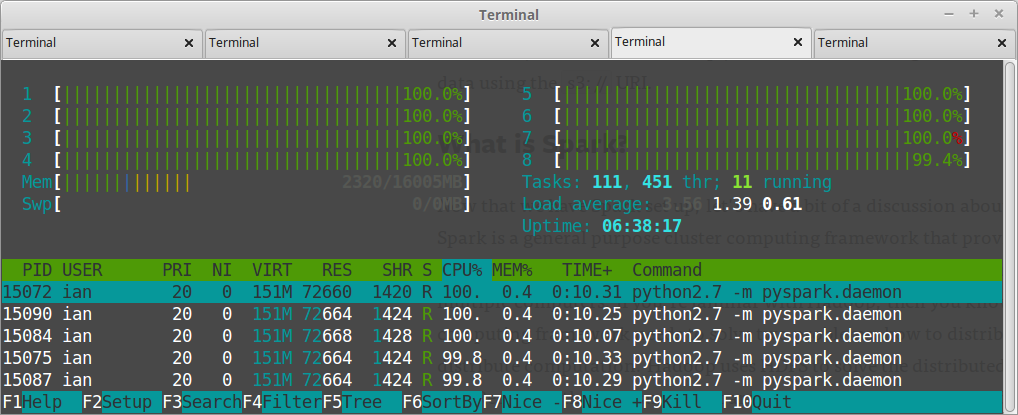

The latest PySpark (1.2) is feeling genuinely useful. Late last year I had a crack at running Apache Spark 1.0 and PySpark and it felt a bit underwhelming (too much fanfare, too many bugs). The media around Spark continues to grow: today’s hackernews thread on the new DataFrame API has a lot of positive discussion, and the lazily evaluated pandas-like dataframes built from a wide variety of data sources feel very powerful. Continuum have also just announced PySpark+GlusterFS.

One surprising fact is that Spark is Python 2.7 only at present. Feature request 4897 is for Python 3 support (go vote!), which requires some cloud pickling to be fixed. Using the end-of-line Python release feels a bit daft. I’m using Linux Mint 17.1, which is based on Ubuntu 14.04 64bit, with the pre-built spark-1.2.0-bin-hadoop2.4.tgz from their downloads page, and ‘it just works’. Using my global Python 2.7.6 and additional IPython install (via apt-get):

    spark-1.2.0-bin-hadoop2.4 $ IPYTHON=1 bin/pyspark
    ...
    IPython 1.2.1 -- An enhanced Interactive Python.
    ...
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /__ / .__/\_,_/_/ /_/\_\   version 1.2.0
          /_/

    Using Python version 2.7.6 (default, Mar 22 2014 22:59:56)
    SparkContext available as sc.
    >>>

Note the IPYTHON=1: without it you get a vanilla Python shell, with it pyspark will use IPython if it is in the search path. IPython lets you interactively explore the “sc” Spark context using tab completion, which really helps at the start. To run one of the included demos (e.g. wordcount) you can use the spark-submit script:

spark-1.2.0-bin-hadoop2.4/examples/src/main/python $ ../../../../bin/spark-submit wordcount.py kmeans.py # count words in kmeans.py

For my use case we were initially after sparse matrix support; sadly that’s only available for Scala/Java at present. By stepping back from my sklearn/scipy sparse solution for a minute and thinking a little more map/reduce, I could just as easily split the problem into a number of counts, and that parallelises very well in Spark (though I’d love to see sparse matrices in PySpark!).
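As a minimal sketch of the “think in counts” idea, here is the map/reduce shape in plain Python so it runs without a cluster. The toy documents and the helper names (`map_phase`, `reduce_by_key`) are made up for illustration; the real problem and data aren’t shown in this post.

```python
from operator import add

# Hypothetical toy input; the real data is not shown in the post.
documents = ["python spark python", "spark elasticsearch", "python"]


def map_phase(doc):
    """Emit a (token, 1) pair per token, as a flatMap step would on an RDD."""
    return [(token, 1) for token in doc.split()]


def reduce_by_key(pairs, func):
    """Combine values that share a key, mirroring what reduceByKey does."""
    combined = {}
    for key, value in pairs:
        combined[key] = func(combined[key], value) if key in combined else value
    return combined


pairs = [pair for doc in documents for pair in map_phase(doc)]
counts = reduce_by_key(pairs, add)
print(sorted(counts.items()))
```

In the PySpark shell the same shape would be `sc.parallelize(documents).flatMap(map_phase).reduceByKey(add)`, with Spark distributing both steps across the workers.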

I’m doing this with my contract-recruitment client via my ModelInsight as we automate recruitment, there’s a press release out today outlining a bit of what we do. One of the goals is to move to a more unified research+deployment approach, rather than lots of tooling in R&D which we then streamline for production, instead we hope to share similar tooling between R&D and production so deployment and different scales of data are ‘easier’.

I tried the latest PyPy 2.5 (running Python 2.7) and it ran PySpark just fine. Using PyPy 2.5 a prime-search example takes 6s vs 39s with vanilla Python 2.7, so in-memory processing using RDDs rather than numpy objects might be quick and convenient (has anyone trialled this?). To run using PyPy set PYSPARK_PYTHON:

$ PYSPARK_PYTHON=~/pypy-2.5.0-linux64/bin/pypy ./pyspark
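The prime-search benchmark itself isn’t reproduced in this post. As a rough idea of the kind of workload involved, here is a sketch of a CPU-bound, pure-Python prime search (simple trial division, my assumption rather than the exact code that was timed). Tight loops like this are where PyPy’s JIT tends to help.

```python
def is_prime(n):
    """Trial division: deliberately simple, CPU-bound pure Python."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    divisor = 3
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 2
    return True


def count_primes(limit):
    """Count the primes below `limit`."""
    return sum(1 for n in range(limit) if is_prime(n))


print(count_primes(100))  # 25 primes below 100
```

In the PySpark shell the same predicate could be applied to an RDD with `sc.parallelize(range(limit)).filter(is_prime).count()`.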

I’m used to working with Anaconda environments and for Spark I’ve set up a Python 2.7.8 environment (“conda create -n spark27 anaconda python=2.7”) with IPython 2.2.0. Whichever Python is in the search path or is specified at the command line is used by the pyspark script.

The next challenge to solve was integration with ElasticSearch for storing outputs. The official docs are a little tough to read as a non-Java/non-Hadoop programmer and they don’t mention PySpark integration. Thankfully there’s a lovely 4-part blog sequence which “just works”:

- ElasticSearch and Python (no Spark but it sets the groundwork)

- Reading & Writing ElasticSearch using PySpark

- Sparse Matrix Multiplication using PySpark

- Dense Matrix Multiplication using PySpark

To summarise the above with a trivial example, here is how to output to ElasticSearch using a trivial local dictionary and no other data dependencies:

    $ wget http://central.maven.org/maven2/org/elasticsearch/elasticsearch-hadoop/2.1.0.Beta2/elasticsearch-hadoop-2.1.0.Beta2.jar
    $ ~/spark-1.2.0-bin-hadoop2.4/bin/pyspark --jars elasticsearch-hadoop-2.1.0.Beta2.jar

    >>> res=sc.parallelize([1,2,3,4])
    >>> res2=res.map(lambda x: ('key', {'name': str(x), 'sim':0.22}))
    >>> res2.collect()
    [('key', {'name': '1', 'sim': 0.22}),
     ('key', {'name': '2', 'sim': 0.22}),
     ('key', {'name': '3', 'sim': 0.22}),
     ('key', {'name': '4', 'sim': 0.22})]
    >>> res2.saveAsNewAPIHadoopFile(path='-',
            outputFormatClass="org.elasticsearch.hadoop.mr.EsOutputFormat",
            keyClass="org.apache.hadoop.io.NullWritable",
            valueClass="org.elasticsearch.hadoop.mr.LinkedMapWritable",
            conf={"es.resource": "myindex/mytype"})

The above creates an RDD of 4 (key, dictionary) pairs and then sends the dictionaries to a local ES store, using “myindex” and “mytype” for each new document. Before I found the above I used this older solution which also worked just fine.
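Reading the documents back follows the same pattern with the matching input format. This is a sketch only, assuming the same elasticsearch-hadoop jar was passed via `--jars` and ES is running locally. The helper name `read_from_es` is made up here; `sc` is the SparkContext that the pyspark shell provides.

```python
def read_from_es(sc, resource="myindex/mytype"):
    """Return an RDD of (key, document) pairs read from ElasticSearch.

    `sc` is the SparkContext from the pyspark shell; `resource` is the
    "index/type" string used when the documents were written.
    """
    return sc.newAPIHadoopRDD(
        inputFormatClass="org.elasticsearch.hadoop.mr.EsInputFormat",
        keyClass="org.apache.hadoop.io.NullWritable",
        valueClass="org.elasticsearch.hadoop.mr.LinkedMapWritable",
        conf={"es.resource": resource},
    )
```

In the shell you could then try `read_from_es(sc).take(2)` to check that the documents written above come back.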

Running the local interactive session using a mock cluster was pretty easy. The docs for spark-standalone are a good start:

    sbin $ ./start-master.sh
    # the log (full path is reported by the script so you could `tail -f` it) shows
    # 15/02/17 14:11:46 INFO Master:
    # Starting Spark master at spark://ian-Latitude-E6420:7077
    # which gives the link to the browser view of the master machine which is
    # probably on :8080 (as shown here http://www.mccarroll.net/blog/pyspark/).

    # Next start a single worker:
    sbin $ ./start-slave.sh 0 spark://ian-Latitude-E6420:7077
    # and the logs will show a link to another web page for each worker
    # (probably starting at :8081; :4040 is the UI of a running application).

    # Next you can start a pySpark IPython shell for local experimentation:
    $ IPYTHON=1 ~/data/libraries/spark-1.2.0-bin-hadoop2.4/bin/pyspark --master spark://ian-Latitude-E6420:7077
    # (and similarly you could run a spark-shell to do the same with Scala)

    # Or we can run their demo code using the master node you've set up:
    $ ~/spark-1.2.0-bin-hadoop2.4/bin/spark-submit --master spark://ian-Latitude-E6420:7077 ~/spark-1.2.0-bin-hadoop2.4/examples/src/main/python/wordcount.py README.txt

Note if you tried to run the above spark-submit (which specifies the --master to connect to) and you didn’t have a master node, you’d see log messages like:

    15/02/17 14:14:25 INFO AppClient$ClientActor: Connecting to master spark://ian-Latitude-E6420:7077...
    15/02/17 14:14:25 WARN AppClient$ClientActor: Could not connect to akka.tcp://sparkMaster@ian-Latitude-E6420:7077: akka.remote.InvalidAssociation: Invalid address: akka.tcp://sparkMaster@ian-Latitude-E6420:7077
    15/02/17 14:14:25 WARN Remoting: Tried to associate with unreachable remote address [akka.tcp://sparkMaster@ian-Latitude-E6420:7077]. Address is now gated for 5000 ms, all messages to this address will be delivered to dead letters. Reason: Connection refused: ian-Latitude-E6420/127.0.1.1:7077

If you had a master node running but you hadn’t set up a worker node then after doing the spark-submit it’ll hang for 5+ seconds and then start to report:

15/02/17 14:16:16 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

and if you google that without thinking about the worker node then you’d come to this diagnostic page which leads down a small rabbit hole…
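For the first failure mode (nothing listening on the master address), a quick check before calling spark-submit is to test whether the master port accepts TCP connections. This is a minimal standard-library sketch. The helper name `master_is_reachable` is made up, and the 7077 default is simply the port seen in the logs above.

```python
import socket


def master_is_reachable(host, port=7077, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        conn = socket.create_connection((host, port), timeout=timeout)
    except socket.error:
        # Covers connection refused, timeouts and unresolvable host names.
        return False
    conn.close()
    return True
```

This only tells you that a process is listening. It can’t tell you whether any workers have registered, so for the second failure mode the master’s web page is still the place to look.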

Stuff I’d like to know:

- How do I read easily from MongoDB using an RDD (in Hadoop format) in PySpark (do you have a link to an example?)

- Milos notes a Python Blaze solution – does this distribute to many nodes?

- Who else in London is using (Py)Spark? Maybe catch-up over a coffee?

Lightning talk at PyDataLondon for Annotate

At this week’s PyDataLondon I did a 5 minute lightning talk on the Annotate text-cleaning service for data scientists that I made live recently. It was good to have a couple of chats after with others who are similarly bored of cleaning their text data.

The goal is to make it quick and easy to clean data so you don’t have to figure out a method yourself. Behind the scenes it uses ftfy to fix broken unicode, unidecode to remove foreign characters if needed and a mix of regular-expressions that are written on the fly depending on the data submitted.
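To give a flavour of these cleaning steps, here is a standard-library-only sketch. It is not how Annotate, ftfy or unidecode work internally, and all three helper names are made up. It shows one common kind of broken unicode (UTF-8 wrongly decoded as Latin-1), a crude accent-stripping step and one example regular-expression rule.

```python
import re
import unicodedata


def fix_mojibake(text):
    """Undo UTF-8 text that was wrongly decoded as Latin-1."""
    try:
        return text.encode("latin-1").decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        return text  # not this kind of breakage, leave it alone


def to_ascii(text):
    """Drop accents by decomposing characters and discarding non-ASCII."""
    decomposed = unicodedata.normalize("NFKD", text)
    return decomposed.encode("ascii", "ignore").decode("ascii")


def collapse_whitespace(text):
    """A stand-in for one regular-expression rule: squeeze runs of spaces."""
    return re.sub(r"\s+", " ", text).strip()


# "caf\u00c3\u00a9" is how a UTF-8 encoded "café" looks when misread as Latin-1.
raw = u"  caf\u00c3\u00a9   au   lait "
print(to_ascii(collapse_whitespace(fix_mojibake(raw))))  # cafe au lait
```

The real libraries handle far more cases (ftfy detects many encoding mix-ups, unidecode transliterates whole scripts), so treat this purely as an illustration of the pipeline shape.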

I suspect that adding some datetime-fixers will be a next step (dealing with UK data when tools often assume that 1/3/13 is 3rd January in US-format is a pain), maybe a fact-extractor will follow.
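The ambiguity is easy to show with the standard library: the same string parses to two different dates depending on the assumed ordering. This is a sketch only, with a made-up helper name; no datetime-fixer exists in the service yet.

```python
from datetime import datetime


def parse_date(text, day_first=True):
    """Parse a d/m/yy or m/d/yy string; day_first=True means UK ordering."""
    fmt = "%d/%m/%y" if day_first else "%m/%d/%y"
    return datetime.strptime(text, fmt).date()


print(parse_date("1/3/13", day_first=True))   # UK reading: 2013-03-01
print(parse_date("1/3/13", day_first=False))  # US reading: 2013-01-03
```

A real fixer would need some evidence to choose the ordering, for example a value such as 25/3/13 elsewhere in the same column, which can only be day-first.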

New Data Science training in April – Machine Learning (scikit-learn and statsmodels) and High Performance Python

In April my ModelInsight data science agency will be running two sets of 2-day training courses in London:

- Understand Statistics and Big Data using Scikit-Learn and Friends (April 7-8) including scikit-learn and statsmodels with a strong grounding in the necessary everyday statistics to use machine learning effectively

- High Performance Python (April 9-10) covering profiling (for both CPU and RAM usage), compiling (Cython & Numba), multi-core and clusters (including IPython Parallel), debugging and storage systems

The High Performance Python course is taught based on years of previous teaching and the book by the same name that I published with O’Reilly last year. The first few tickets for both courses have a 10% discount if you’re quick.

We also have a low-volume training announce list, you should join this if you’d like to be kept up to date about the training.

Read my book

O’Reilly: High Performance Python by Micha Gorelick & Ian Ozsvald
