Entrepreneurial Geekiness

Second PyDataLondon Meetup a Javascript/Analytic-tastic event

This week we ran our 2nd PyDataLondon meetup (@PyDataLondon) with 70 in the room and a rather techy set of talks. As before we were hosted by Pivotal (@gopivotal) via Ian – many thanks for the beer and pizza! I took everyone to the pub afterwards for a beer on our data science consultancy to help get everyone talking.

UPDATE As of October 2014 I’ll be teaching Data Science and High Performance Python in London, sign-up here if you’d like to be notified of our courses (no spam, just occasional notes about our plans).

As a point of admin – we’re very happy that people who were RSVPd but couldn’t make it were able to unRSVP to free up spots for those on the waitlist. This really helps with predicting the number of attendees (which we need for beer & pizza estimates) so we can get in everyone who wants to attend.

We’re now looking for speakers for our 3rd event – please get in contact via the meetup group.

First up we had Kyran Dale (my old co-founder in our ShowMeDo educational site) talking around his consulting speciality of JavaScript and Python. In “Getting your Python data into the Browser“ he covered ways to get started, including ways to export Pandas data into D3 with example code, JavaScript pitfalls and linting.

Next we had Laurie Clark-Michalek talking on “Defence of the Ancients 2 Game Analysis using Python“. Laurie went low-level into Cython with profiling via gprof2dot (which incidentally we cover in our HPC book) and gave some insight into the professional game-play and analysis world.
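As a rough illustration of the gprof2dot workflow mentioned here, the following is a minimal sketch that profiles plain Python with the standard library's cProfile and writes a stats file that gprof2dot can read. It is not Laurie's code and it profiles pure Python rather than Cython; `slow_sum` and `profile_to_file` are hypothetical names invented for this example.

```python
import cProfile


def slow_sum(n):
    """A deliberately naive loop so the profiler has something to measure."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_to_file(path, func, *args):
    """Run func(*args) under cProfile and dump the stats to `path`.

    The file is written in the standard pstats format.
    """
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    profiler.dump_stats(path)
    return result
```

Calling `profile_to_file("slow_sum.pstats", slow_sum, 100000)` writes the stats file. Assuming gprof2dot and Graphviz are installed, `gprof2dot -f pstats slow_sum.pstats | dot -Tpng -o slow_sum.png` should then render the call graph as an image.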

We then had 2 lightning talks:

- Samuel Colvin introducing Julia (I hope we’ll get a bigger Julia talk for a future event, it seems like an exciting area) – slides

- Ian Taylor (@flyingbinary) on geospatial tools for Python

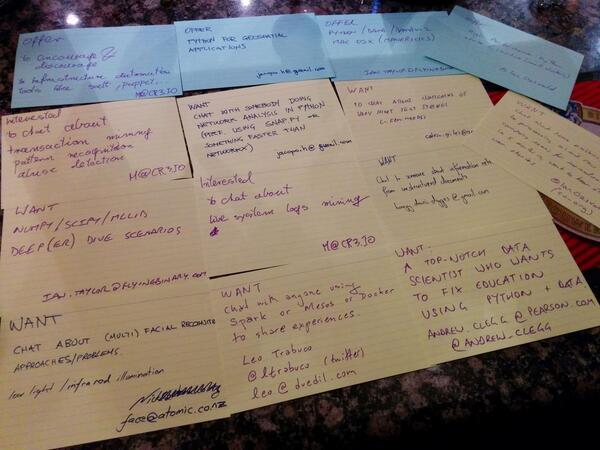

We finished with a small experiment – I brought a set of cards and people filled in a list of problems they’d like to discuss and skills they could share. Here’s the set, we’ll run this experiment next month (and iterate, having learned a little from this one). In the pub after I had a couple of nice chats from my ‘want’ (around “company name cleaning” from free-text sources):

Topics listed on the cards included Apache Spark, network analysis, numpy, facial recognition, geospatial and a job post. I expect we’ll grow this idea over the next few events.

Please get in contact via the meetup group if you’d like to speak, next month we have a talk on a new data science platform. The event will be on Tues August 5th at the same location.

I’ll be out at EuroPython & PyDataBerlin later this month, I hope to see some of you there. EuroSciPy is in Cambridge this year in August.

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.

PyDataLondon second meetup (July 1st)

Our second PyDataLondon meetup will be running on Tuesday July 1st at Pivotal in Shoreditch. The announcement went out to the meetup group and the event was at capacity within 7 hours – if you’d like to attend future meetups please join the group (and the wait-list is open for our next event). Our speakers:

- Kyran Dale on “Getting your Python data onto a Browser” – Python+javascript from ex-academic turned Brighton-based freelance Javascript Pythonic whiz

- Laurie Clark-Michalek – “Defence of the Ancients Analysis: Using Python to provide insight into professional DOTA2 matches” – game analysis using the full range of Python tools from data munging, high performance with Cython and visualisation

We’ll also have several lightning talks, these are described on the meetup page.

We’re open to submissions for future talks and lightning talks, please send us an email via the meetup group (and we might have room for 1 more lightning talk for the upcoming pydata – get in contact if you’ve something interesting to present in 5 minutes).

Some other events might interest you – Brighton has a Data Visualisation event and recently Yves Hilpisch ran a QuantFinance training session and the slides are available. Also remember PyDataBerlin in July and EuroSciPy in Cambridge in August.


High Performance Python manuscript submitted to O’Reilly

I’m super-happy to say that Micha and I have submitted the manuscript to O’Reilly for our High Performance Python book. Here’s the final chapter list:

- Understanding Performant Python

- Profiling to find bottlenecks (%timeit, cProfile, line_profiler, memory_profiler, heapy and more)

- Lists and Tuples (how they work under the hood)

- Dictionaries and Sets (under the hood again)

- Iterators and Generators (introducing intermediate-level Python techniques)

- Matrix and Vector Computation (numpy and scipy and Linux’s perf)

- Compiling to C (Cython, Shed Skin, Pythran, Numba, PyPy) and building C extensions

- Concurrency (getting past IO bottlenecks using Gevent, Tornado, AsyncIO)

- The multiprocessing module (pools, IPC and locking)

- Clusters and Job Queues (IPython, ParallelPython, NSQ)

- Using less RAM (ways to store text with far less RAM, probabilistic counting)

- Lessons from the field (stories from experienced developers on all these topics)

August is still the expected publication date, a soon-to-follow Early Release will have all the chapters included. Next up I’ll be teaching on some of this in August at EuroSciPy in Cambridge.

Some related (but not covered in the book) bits of High Performance Python news:

- PyPy.js is now faster than CPython (but not as fast as PyPy) – a crazy and rather cutting-edge effort to get Python code running on a javascript engine through the RPython PyPy toolchain

- Micropython runs in tiny memory environments. It aims to run on embedded devices (e.g. ARM boards) with low RAM where CPython couldn’t possibly run; it is pretty advanced and lets us use Python code in a new class of environment

- cytoolz offers Cython compiled versions of the pytoolz extended iterator objects, running faster than pytoolz and via iterators probably using significantly less RAM than when using standard Python containers
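The RAM point in the last item can be sketched with the standard library alone. This example deliberately uses a plain generator and itertools rather than pytoolz or its Cython counterpart, and the function names are made up for illustration. Note that `sys.getsizeof` only measures the container itself, not the integers it refers to, so the real gap is larger than the numbers it reports.

```python
import sys
from itertools import islice


def squares_list(n):
    """Materialise every value up front in a list."""
    return [i * i for i in range(n)]


def squares_iter(n):
    """Yield values lazily, one at a time."""
    return (i * i for i in range(n))


n = 100_000
as_list = squares_list(n)
as_iter = squares_iter(n)

# Size of the container objects only (the list holds n references,
# the generator holds a single suspended frame).
list_bytes = sys.getsizeof(as_list)
iter_bytes = sys.getsizeof(as_iter)
print(f"list: {list_bytes} bytes, generator: {iter_bytes} bytes")

# Iterators compose lazily: take the first five squares without
# building anything larger than the five-element result.
first_five = list(islice(squares_iter(n), 5))
print(first_five)
```

The trade-off is that a generator can only be consumed once, so this suits streaming pipelines better than data you need to revisit.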


Flask + mod_uwsgi + Apache + Continuum’s Anaconda

I’ve spent the morning figuring out how to use Flask through Anaconda with Apache and uWSGI on an Amazon EC2 machine, side-stepping the system’s default Python. I’ll log the main steps here; I found lots of hints on the web but nothing that tied it all together for someone like me who lacks Apache config experience. The reason for deploying using Anaconda is to keep a consistent environment against our dev machines.

First it is worth noting that mod_wsgi and mod_uwsgi (this is what I’m using) are different things. Flask’s Apache instructions talk about mod_wsgi and describe mod_uwsgi for nginx. Continuum’s Anaconda forum had a hint but not a worked solution.

I’ve used mod_wsgi before with a native (non-Anaconda) Python installation (plus a virtualenv of numpy, scipy etc) and I wanted to do something similar using an Anaconda install of an internal recommender system for a client. The following summarises my working notes; please add a comment if you can improve any steps.

- Setup an Ubuntu 12.04 AMI on EC2

- source activate production # activate the Anaconda environment (I'm assuming you've setup an environment and put your src onto this machine)

- conda install -c https://conda.binstar.org/travis uwsgi # install uwsgi 2.0.2 into your Anaconda environment using binstar (other, newer versions might be available)

- uwsgi --http :9090 --uwsgi-socket localhost:56708 --wsgi-file <path>/server.wsgi # run uwsgi locally on a specified TCP/IP port

- curl localhost:9090 # calls localhost:9090/ to test your Flask app is responding via uwsgi

If you get uwsgi running locally and you can talk to it via curl then you’ve got an installed uwsgi gateway running with Anaconda – that’s the less-discussed-on-the-web part done.
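If you’d rather script the curl check (for a deploy script or a monitoring job, say), a minimal standard-library sketch might look like the following. The function name `is_responding` is my own invention, and the URL in the usage note assumes the same port 9090 used above. Proxies are explicitly disabled because the check is aimed at localhost.

```python
import http.client
import urllib.error
import urllib.request


def is_responding(url, timeout=2.0):
    """Return True if a GET to `url` succeeds with a 2xx/3xx status.

    Connection failures, timeouts and HTTP error statuses (4xx/5xx)
    all count as "not responding".
    """
    # An empty ProxyHandler stops urllib picking up proxy settings
    # from the environment, which would be wrong for a local check.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False
```

With uwsgi running as above, `is_responding("http://localhost:9090/")` should return True, and False once the uwsgi process is stopped.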

Now setup Apache:

- sudo apt-get install lamp-server^ # Install the LAMP stack

- sudo a2dissite 000-default # disable the default Apache app

- # I believe the following is sensible but if there's an easier or better way to talk to uwsgi, please leave me a comment (should I prefer unix sockets maybe?)

- sudo apt-get install libapache2-mod-uwsgi # install mod_uwsgi

- sudo a2enmod uwsgi # activate mod_uwsgi in Apache

- # create myserver.conf (see below) to configure Apache

- sudo a2ensite myserver.conf # enable your server configuration in Apache

- service apache2 reload # somewhere around now you'll have to reload Apache so it sees the new configurations, you might have had to do it earlier

My server.wsgi lives with my source (outside of the Apache folders). As noted in the Flask wsgi page it contains:

import sys
sys.path.insert(0, "<path>/mysource")
from server import app as application

Note that it doesn’t need the virtualenv hack as we’re not using virtualenv, you’ve already got uwsgi running with Anaconda’s Python (rather than the system’s default Python).
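The reason the import is aliased to `application` is that the gateway looks for a WSGI callable, and a Flask `app` object is one. To make that contract concrete without needing Flask installed, here is a minimal sketch of a hand-written WSGI callable plus a tiny helper that invokes it the way a server would. Both `application` (as written here) and `call_wsgi` are illustrative stand-ins, not part of my actual server.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """A bare WSGI callable: takes the request environ and a
    start_response function, returns an iterable of bytes."""
    body = b"Hello from a WSGI callable\n"
    headers = [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


def call_wsgi(app, path="/"):
    """Invoke a WSGI app in-process and return (status, body)."""
    environ = {}
    setup_testing_defaults(environ)  # fills in a plausible GET request
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body


status, body = call_wsgi(application)
print(status, body)
```

The same `call_wsgi` helper should work against a Flask `app`, which is a handy way to smoke-test the import path in server.wsgi before involving uwsgi or Apache at all.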

The Apache configuration lives in /etc/apache2/sites-available/myserver.conf and it has only the following lines (credit: Django uwsgi doc), note the specified port is the same as we used when running uwsgi:

<VirtualHost *:80>

<Location />

SetHandler uwsgi-handler

uWSGISocket 127.0.0.1:56708

</Location>

</VirtualHost>

Once Apache is running, if you stop your uwsgi process then you’ll get 502 Bad Gateway errors, if you restart your uwsgi process then your server will respond again. There’s no need to restart Apache when you restart your uwsgi process.

For debugging note that /etc/apache2/mods-available/ will contain uwsgi.load once mod_uwsgi is installed. The uwsgi binary lives in your Anaconda environment (for me it is ~/anaconda/envs/production/bin/uwsgi), it’ll only be active once you’ve activated this environment. Useful(ish) error messages should appear in /var/log/apache2/error.log. uWSGI has best practices and a FAQ.

Having made this run at the command line it now needs to be automated. I’m using Circus. I’ve installed this via the system Python (not via Anaconda) as I wanted to treat it as being outside of the Anaconda environment (just as Upstart, cron etc would be outside of this environment), this means I needed a bit of tweaking. Specifically PATH must be configured to point at Anaconda and a fully qualified path to uwsgi must be provided:

#circus.ini
[circus]
check_delay = 5
endpoint = tcp://127.0.0.1:5555
pubsub_endpoint = tcp://127.0.0.1:5556

[env:myserver]
PATH=/home/ubuntu/anaconda/bin:$PATH

[watcher:myserver]
cmd = <path_anaconda>/envs/production/bin/uwsgi
args = --http :9090 --uwsgi-socket localhost:56708 --wsgi-file <config_dir>/server.wsgi --chdir <working_dir>
warmup_delay = 0
numprocesses = 1

This can be run with “circusd <config>/circus.ini --log-level debug” which prints out a lot of debug info to the console. Remember to run this with a login shell and not in the Anaconda environment if you’ve installed it without using Anaconda.
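Since the two easy mistakes are a relative `cmd` and a missing PATH entry, a small pre-flight check on the ini file can save a debugging round. The sketch below uses only Python's configparser and is not part of Circus. The `check_watchers` helper is hypothetical, and the concrete paths in the sample text are illustrative values standing in for the placeholders in my real file.

```python
import configparser
import posixpath

# Illustrative config: the paths below are example values only.
CIRCUS_INI = """\
[circus]
check_delay = 5
endpoint = tcp://127.0.0.1:5555
pubsub_endpoint = tcp://127.0.0.1:5556

[env:myserver]
PATH = /home/ubuntu/anaconda/bin:$PATH

[watcher:myserver]
cmd = /home/ubuntu/anaconda/envs/production/bin/uwsgi
args = --http :9090 --uwsgi-socket localhost:56708
warmup_delay = 0
numprocesses = 1
"""


def check_watchers(ini_text):
    """Return a list of human-readable problems found in the config."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names such as PATH case-sensitive
    parser.read_string(ini_text)

    problems = []
    for section in parser.sections():
        if not section.startswith("watcher:"):
            continue
        name = section.split(":", 1)[1]
        cmd = parser[section].get("cmd", "")
        if not posixpath.isabs(cmd):
            problems.append(f"[{section}] cmd is not a fully qualified path: {cmd!r}")
        env_section = f"env:{name}"
        if env_section not in parser or "PATH" not in parser[env_section]:
            problems.append(f"[{section}] has no PATH set in [{env_section}]")
    return problems


print(check_watchers(CIRCUS_INI) or "config looks sane")
```

This only checks the shape of the file; it doesn’t confirm that the uwsgi binary exists or that Circus will accept every option.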

Once this works it can be configured for control by the system. I’m using Upstart on Ubuntu via the Circus Deployment instructions with a /etc/init/circus.conf script, configured to its own directory.

If you know that mod_wsgi would have been a better choice then please let me know (though dev for the project looks very slow [it says “it is resting”]), I’m experimenting with mod_uwsgi (it seems to be more actively developed) but this is a foreign area for me, I’d be happy to learn of better ways to crack this nut. A quick glance suggests that both support Python 3.


7 chapters of “High Performance Python” now live

O’Reilly have just released another update to our High Performance Python book, in total we’ve now released the following:

- Understanding Performant Python

- Profiling to find bottlenecks (%timeit, cProfile, line_profiler, memory_profiler, heapy)

- Lists and Tuples

- Dictionaries and Sets

- Iterators and Generators

- Matrix and Vector Computation (numpy and scipy)

- Compiling to C (Cython, Shed Skin, Pythran, Numba, PyPy)

We’re in the final edit cycle, we have a lot of edits to commit to the main chapters over the next week for the next Early Release. All going well the book will be published in August.

