Entrepreneurial Geekiness

Headroid1 – a face tracking robot head

The video below introduces Headroid1. This face-tracking robot will grow into a larger system that can follow people’s faces, detect emotions and react to engage with the visitor.

The system above uses OpenCV’s face detection (via the Python bindings and facedetect.py) to work out whether the face is in the centre of the screen. If the camera needs to move, it talks via pySerial to BotBuilder’s ServoBoard to pan or tilt the camera until the face is back in the centre of the screen.

Update – see Building A Face Tracking Robot In An Afternoon for full details to build your own Headroid1.

Headroid is pretty good at tracking faces as long as there’s no glare; he can see people from 1 foot up to about 8 feet from the camera. He moves at different speeds depending on how far you are from the centre of the screen, and stops with a stable picture when you’re back at the centre of his attention. A smile/frown detector, coming next, will add another layer of behaviour.
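The tracking behaviour described above can be sketched as a simple proportional controller: the further the face centre is from the frame centre, the bigger the servo step, with a small dead zone so the head settles once the face is centred. This is a minimal sketch, not Headroid1’s actual code; the frame size, dead-zone width, gain, step cap and 0–180 degree servo range are all assumptions, and the face rectangle would come from OpenCV’s face detector.

```python
from typing import Tuple

# Assumed values for illustration only, not Headroid1's real settings.
FRAME_W, FRAME_H = 320, 240   # hypothetical camera resolution in pixels
DEAD_ZONE = 20                # pixels from centre treated as "centred"
GAIN = 0.05                   # degrees of servo movement per pixel of error
MAX_STEP = 10                 # cap on a single move, in degrees


def axis_step(error_px: float) -> int:
    """Servo step in degrees for one axis; 0 inside the dead zone."""
    if abs(error_px) <= DEAD_ZONE:
        return 0
    # Larger offsets give larger (faster) moves, capped at MAX_STEP.
    step = round(error_px * GAIN)
    return max(-MAX_STEP, min(MAX_STEP, step))


def pan_tilt_step(face: Tuple[int, int, int, int]) -> Tuple[int, int]:
    """Given a face rectangle (x, y, w, h), return (pan_step, tilt_step)."""
    x, y, w, h = face
    face_cx = x + w / 2
    face_cy = y + h / 2
    return axis_step(face_cx - FRAME_W / 2), axis_step(face_cy - FRAME_H / 2)


def clamp_angle(angle: int) -> int:
    """Keep a servo angle inside the assumed 0-180 degree range."""
    return max(0, min(180, angle))
```

A face centred in the frame gives (0, 0), so the head stops with a stable picture; a face off to one side gives a step whose size grows with the offset. Whether a positive step means left or right depends on how the servos are mounted.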

Heather (founder of Silicon Beach Training) used Headroid1 (called Robocam in her video) at Likemind coffee this morning; she’s written up the event:

Andy White (@doctorpod) also did a quick 2 minute MP3 interview with me via audioboo.

Later over coffee Danny Hope and I discussed (with Headroid looking on) some ideas for tracking people, watching for attention, monitoring for frustration and concentration and generally playing with ways people might interact with this little chap:

The above was built in collaboration with BuildBrighton; there’s some discussion about it in this thread. The camera is a Philips SPC900NC, which works using macam on my Mac (and runs on Linux and Windows too). The ServoBoard has a super-simple interface: you send it text commands like ‘90a’ (turn servo A to 90 degrees) and ‘it just works’, which makes interactive testing a doddle.
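Because the ServoBoard takes plain-text commands like ‘90a’, driving it from Python needs little more than string formatting and a serial write. The sketch below assumes the angle-then-servo-letter format described above, an angle range of 0–180 degrees and no line terminator; the port name and baud rate in the usage comment are hypothetical, so check the board’s documentation before relying on them.

```python
import string


def servo_command(angle: int, servo: str) -> bytes:
    """Build a ServoBoard-style text command such as b'90a'.

    Assumes the '<angle><servo letter>' format, with the angle clamped
    to an assumed 0-180 degree range and no line terminator.
    """
    servo = servo.lower()
    if len(servo) != 1 or servo not in string.ascii_lowercase:
        raise ValueError("servo must be a single letter such as 'a'")
    angle = max(0, min(180, int(angle)))
    return f"{angle}{servo}".encode("ascii")


def move_servo(port, angle: int, servo: str) -> bytes:
    """Send one command to an open serial-like object (anything with .write())."""
    cmd = servo_command(angle, servo)
    port.write(cmd)
    return cmd


# Usage with pySerial (hypothetical port name and baud rate):
#   import serial
#   with serial.Serial("/dev/tty.usbserial", 9600, timeout=1) as port:
#       move_servo(port, 90, "a")   # servo A to 90 degrees
#       move_servo(port, 45, "b")   # servo B to 45 degrees
```

Accepting any object with a write() method keeps the formatting logic testable without a board attached, which suits the same interactive, try-it-and-see style the text interface encourages.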

Update – the blog for the A.I. Cookbook is now active, more A.I. and robot updates will occur there.

Reference material:

The following should help you move forwards:

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.

Extracting keyword text from screencasts with OCR

Last week I played with the Optical Character Recognition system tesseract applied to video data. The goal – extract keywords from the video frames so Google has useful text to index.

I chose to work with ShowMeDo’s screencasts as many show programming in action; there’s great keyword information in these videos that can be exposed for Google to crawl. This builds on my recent OCR for plaques project.

I’ll blog about the full system in the future; this is a quick how-to if you want to try it yourself.

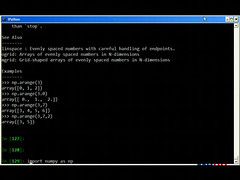

First – get a video. I downloaded video 10370000.flv from Introducing numpy arrays (part 1 of 11).

Next – extract a frame. Using ffmpeg I extracted a frame at 240 seconds as a JPG:

ffmpeg -ss 240 -i 10370000.flv -y -frames:v 1 -q:v 2 10370000_240.jpg

Tesseract needs TIF input files (not JPGs) so I used GIMP to convert to TIF.

Finally I applied tesseract to extract text:

tesseract 10370000_30.tif 10370000_30 -l eng

This yields:

than rstupr . See Also linspate : Evenly spaced numbers with careful handling of endpoints. grid: Arrays of evenly spared numbers in Nrdxmensmns grid: Grid—shaped arrays of evenly spaced numbers in Nwiunensxnns Examples >>> np.arange(3) ¤rr¤y([¤. 1. 2]) >>> np4arange(3.B) array([ B., 1., 2.]) >>> np.arange(3,7) array([3, A, S, 6]) >>> np.arange(3,7,?) ·=rr··¤y<[3. 5]) III Ill

Obviously there’s some garbage in the above but there are also a lot of useful keywords!
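To repeat the frame-grab and OCR steps over several timestamps, a small script can build the ffmpeg and tesseract command lines and run them with subprocess. This is a sketch rather than the pipeline I used: it assumes a current ffmpeg (where -frames:v 1 writes a single frame) and Tesseract 3.x or later, which reads PNG directly through the Leptonica image library, so the GIMP conversion to TIF isn’t needed. The function names and timestamps are illustrative.

```python
import subprocess
from pathlib import Path
from typing import List


def frame_command(video: str, seconds: int, out_png: str) -> List[str]:
    """ffmpeg command that saves the single frame at `seconds` as a PNG."""
    # -ss before -i seeks quickly to the timestamp; -frames:v 1 writes one frame.
    return ["ffmpeg", "-y", "-ss", str(seconds), "-i", video,
            "-frames:v", "1", out_png]


def ocr_command(image: str, out_base: str, lang: str = "eng") -> List[str]:
    """tesseract command; tesseract appends .txt to out_base itself."""
    return ["tesseract", image, out_base, "-l", lang]


def ocr_video(video: str, timestamps: List[int], run: bool = True) -> List[List[str]]:
    """Grab and OCR one frame per timestamp; returns the commands it built."""
    stem = Path(video).stem
    commands = []
    for t in timestamps:
        png = f"{stem}_{t}.png"
        commands.append(frame_command(video, t, png))
        commands.append(ocr_command(png, f"{stem}_{t}"))
    if run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop on the first failure
    return commands


# Example (needs ffmpeg and tesseract on the PATH):
#   ocr_video("10370000.flv", [30, 240])
# writes 10370000_30.txt and 10370000_240.txt alongside the PNG frames.
```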

To clean up the extraction I’ll be experimenting with:

- Using the original AVI video rather than the FLV, which contains compression artefacts that reduce the visual quality; the FLV is also watermarked with ShowMeDo’s logo, which hurts some images

- Cleaning the image, perhaps by applying some thresholding or highlighting to make the text stand out (the green text may be causing a problem in this image)

- Training tesseract to read the terminal fonts commonly found in ShowMeDo videos
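For the thresholding idea, one standard option (not something I’ve tested on these frames yet) is Otsu’s method, which picks the grey level that best separates dark pixels from light ones. Below is a dependency-free sketch that works on a greyscale image given as rows of 0–255 values; in practice you’d load the frame with an imaging library first, and light-on-dark terminal text may need inverting afterwards.

```python
from typing import List

Image = List[List[int]]  # rows of greyscale values, 0 (black) to 255 (white)


def otsu_threshold(img: Image) -> int:
    """Return the threshold t that maximises between-class variance (Otsu).

    Pixels <= t form one class and pixels > t the other.
    """
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below t
    sum0 = 0  # sum of grey values at or below t
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so no split here
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Between-class variance up to a constant factor of 1/total**2.
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t


def binarise(img: Image, t: int) -> Image:
    """Map pixels above t to white (255) and the rest to black (0)."""
    return [[255 if p > t else 0 for p in row] for row in img]
```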

I tried four images for this test and in all cases useful text was extracted. I suspect that if I reject short words (fewer than four characters) and keep only words that appear at least twice in the video, I’ll have a clean set of useful keywords.
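That filtering heuristic is easy to try: split each frame’s OCR text into words, drop anything shorter than four characters and keep words that appear at least twice across the video. A minimal sketch, assuming one OCR text string per frame; the tokenising pattern (letters, digits, dots and underscores, so np.arange survives as one token) and the lower-casing are my assumptions.

```python
import re
from collections import Counter
from typing import Iterable, List

# Assumed token pattern: a letter or underscore, then letters, digits, dots
# or underscores, so identifiers like np.arange stay in one piece.
TOKEN = re.compile(r"[A-Za-z_][A-Za-z0-9_.]*")


def keywords(frame_texts: Iterable[str], min_len: int = 4,
             min_count: int = 2) -> List[str]:
    """Candidate keywords from OCR text, most frequent first."""
    counts = Counter()
    for text in frame_texts:
        for tok in TOKEN.findall(text):
            tok = tok.strip(".")  # drop trailing full stops like "B."
            if len(tok) >= min_len:
                counts[tok.lower()] += 1
    return [w for w, c in counts.most_common() if c >= min_count]
```

Raising min_count trades recall for precision; with only four frames per video, two occurrences seems a sensible starting point.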

“Artificial Intelligence in the Real World” lecture at Sussex University 2010

I’m chuffed to have delivered the second version of my “A.I. in the real world” lecture (I gave it last May too) to 2nd year undergraduates at Sussex University this afternoon.

The slides are below, I cover:

- A.I. that I’ve seen and have been involved with in the last 10 years

- Some project ideas for undergraduates

- How to start a new tech business/project in A.I.

In the talk I also showed or talked about:

- A YouTube video of the DARPA Grand Challenge (down below)

- The Internet Movie Firearms Database, when talking about how a search for “movie on a beach with bangalores” could resolve to Saving Private Ryan, if someone writes that search engine

- Optical Character Recognition using the open source Tesseract

- pyCUDA and high performance computing (which I’m teaching at BrightonPy soon)

- My plan for an Artificial Intelligence Handbook (collaborative project – all welcome!)

- BuildBrighton – the local HackerSpace

- £5 App event – celebrating people who build neat stuff

- FlashBrighton – lots of interesting talks

- LikeMind coffees – lots of interesting people

Artificial Intelligence in the Real World May 2010 Sussex University Guest Lecture

Here’s the YouTube video showing the Grand Challenge entries:

Tesseract optical character recognition to read plaques

The tesseract engine (wikipedia) is a very capable OCR package; I’m playing with it after a thought for my AI Handbook plan. OCR is a pretty interesting subject: it drove a lot of early computer research as it was used to automate paper filing for banks and companies like Reader’s Digest. This TesseractOSCON paper gives a nice summary of how it works.

Update – almost 100% perfect recognition results are possible, see OCR Webservice work-in-progress for an update.

As it states on the website:

“The Tesseract OCR engine was one of the top 3 engines in the 1995 UNLV Accuracy test. Between 1995 and 2006 it had little work done on it, but it is probably one of the most accurate open source OCR engines available. The source code will read a binary, grey or color image and output text. A tiff reader is built in that will read uncompressed TIFF images, or libtiff can be added to read compressed images.”

I wanted to see how well it might extract the text from English Heritage plaques for the openplaques.org project. At the weekend I took this photo:

On the command line I ran:

tesseract SwissGardensPlaque.tif output -l eng

and the result in output.txt was:

"VICTORIAN 1=e—1.EAsuRE masonrr _ _ THE SWISS GARDENS FOUNDED HERE IN 1838 E x BY JAMES BRITTON BALLEY SHIPBUILDER 9/ 1789 — 1863 N x égpis COQQE USSEX c0UN“"

Obviously the result isn’t brilliant but all the major text is present – this is without any training or preparation.

As a pre-processing test I flattened the image to a bit depth of 1 (black and white), rotated it a little to straighten the text and cropped some of the unnecessary parts. The recognition improves a small amount, but the speckling and the bent text are still a problem:

"W,R¤¤AM a ‘ A VICTORlAN i ‘ P-LEASURE RESORT SWISS GARDENS FOUNDED mama IN 1838 BY JAMES\ BRITTON BALLEY SHIPBUILDER 1789 - 1863 lb (9*, 6*7 S 006 USSEX couw 1"

I tried a few other plaques and the results were similar: generally all the pertinent text came through along with some noise.
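To put a number on how much of the pertinent text came through, one simple check is keyword recall: the fraction of words you expect on the plaque that appear in the OCR output. This is an illustrative sketch; the expected-word list has to be typed in by hand for each plaque (the one in the usage comment is hypothetical), and exact matching ignores near-misses such as VICTORlAN.

```python
import re
from typing import Iterable, List, Tuple


def words(text: str) -> List[str]:
    """Upper-cased runs of A-Z and 0-9 from a block of text."""
    return re.findall(r"[A-Z0-9]+", text.upper())


def keyword_recall(ocr_text: str, expected: Iterable[str]) -> Tuple[float, List[str]]:
    """Return (fraction of expected words found, list of missed words)."""
    found = set(words(ocr_text))
    expected = [w.upper() for w in expected]
    missed = [w for w in expected if w not in found]
    recall = 1 - len(missed) / len(expected) if expected else 0.0
    return recall, missed


# Usage (hand-typed, hypothetical expected list):
#   expected = "SWISS GARDENS FOUNDED 1838 JAMES BRITTON".split()
#   recall, missed = keyword_recall(open("output.txt").read(), expected)
```

Comparing the recall before and after pre-processing gives a rough measure of whether a change such as thresholding or rotation actually helps.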

On my MacBook it took 20 minutes to get started. I downloaded:

- tesseract-2.04.tar.gz

- tesseract-2.00.eng.tar.gz

As noted in the README I extracted the .eng data files into tessdata/, ran ‘./configure’, ‘make’, ‘sudo make install’ and that was all.

For future work there are other OCR systems with SDKs, and the algorithms used for number plate recognition might be an interesting place to start.

New book/wiki – a practical artificial intelligence ‘cookbook’

Having almost completed The Screencasting Handbook I’m now thinking about my next project. I’ve been involved in the field of artificial intelligence since my first computer (a Commodore 64 back in the 80s) and I’ve continued to be paid to work in this area since the end of the 90s.

Update – as mentioned below the new project has started – read more at the A.I. Cookbook blog.

My goal now is to write a collaborative book (probably using a wiki) that takes a very practical look at the use of artificial intelligence in web-apps and desktop software. The big goal would be to teach you how to effectively use A.I. techniques in your job and for your own research. Here are a few of the topics that could be covered:

- Using open source and commercial tools for face, object and speech recognition

- Playing with open source and commercial text to speech tools (e.g. the open source festival)

- Automated control of driving and flight simulators with artificial brains

- Building chatbot systems using tools like AIML, CHAT-L and natural language parsing kits

- Using natural language parsing to add some smarts to apps – maybe for reading and identifying interesting people in Twitter and on blogs

- Building useful demos around techniques like neural networks and evolutionary optimisation

- Adding brains to real robots with some Arduinos and open source robot kits

- Teaching myself machine learning and pattern matching (an area I’m weak on) along with useful libraries for techniques like Bayesian classification (Python’s reverend is great for this)

- Parallel computation engines like Amazon’s EC2, libcloud and GPU programming with CUDA and OpenCL

- Using Python and C++ for prototyping (along with Matlab and some other relevant languages)

- and a whole bunch of other stuff – your input is very welcome

I’ve noticed that there are an awful lot of open source (and commercial) toolkits but very few practical guides to using them in your own software. What I want to encourage are some fun projects that’ll run for a month or two, here are some ideas:

- Using optical character recognition engines to augment projects like OpenPlaques.org with free meta data from real-world photos (for a start see my Tesseract OCR post)

- Collaborating in real-world competitions like the Simulated Car Racing Competition 2010: Demolition Derby (they’re running a simulated project that’s not unlike the DARPA Grand Challenge)

- Applying face recognition algorithms to flickr photos so we can track who is posting images of us for identity management

- Creating a Twitter bot that responds to questions and maybe can have a chat (checking the weather should be easy, some memory could be useful – using Twitter as an interface to tools like OCR for plaques might be fun too) – I have one of these in development right now

- Building a Zork-solving bot (using NLP and tools like ConceptNet) that can play interactive fiction, build maps and try to solve puzzles

- Using evolutionary optimisation techniques like genetic algorithms on the traveling salesman problem

- Building Braitenberg-like brains for open source robot kits (like those by Steve at BotBuilder)

- Creating a QR code and bar code reader, tied to a camera

LinkedIn has my history. Here’s my work site (please forgive it being a little…simple): Mor Consulting Ltd. I’m the AI Consultant for Qtara.com and I used to be the Senior Programmer for the UK R&D arm of MasaGroup.net/BlueKaizen.com.

I don’t have a definite timeline for the book, I’ll be making that up with you and everyone else once I’ve finished The Screencasting Handbook (end of April).

The Artificial Intelligence Cookbook project has started – the blog is currently active (along with the @aicookbook Twitter account). There is a mailing list to join for occasional updates – email AICookbook@Aweber.com to join.

It will be a commercial project and I will be looking to make it very relevant to however you’re using AI. Sign-up and you’ll get some notifications from me as the project develops.

Read my book: O’Reilly’s High Performance Python by Micha Gorelick & Ian Ozsvald
