I had the opportunity to play with a Kinect over the weekend and wanted to test out depth mapping using the built-in infrared camera. The Kinect uses a structured light approach, which is different to the stereopsis approach I was looking at with Kyran recently.

Using the open source drivers for Ubuntu I quickly got the basic depth sensing demo running. Here’s Emily in our living room:

According to the Wikipedia entry the Kinect has a depth resolution of approximately 1.3mm. Below is a shot of my laptop with a few objects in the background, and you can just about make out the bevelling around the edge of the keys:

I’m particularly interested in using Python’s numpy and the OpenCV bindings for depth measurement. These simple instructions don’t work for me (grr!) as I can’t get the freenect library on Ubuntu 11.10 to recognise the Kinect (even though it obviously runs fine using the OpenNI drivers!). I tried using Ubuntu 12.04 in VirtualBox, but apparently VM solutions don’t work with the Kinect due to some USB limitations 🙁

However, the PyOpenNI drivers work just fine on Ubuntu 11.04, even though libfreenect continues to insist that I have no Kinect.

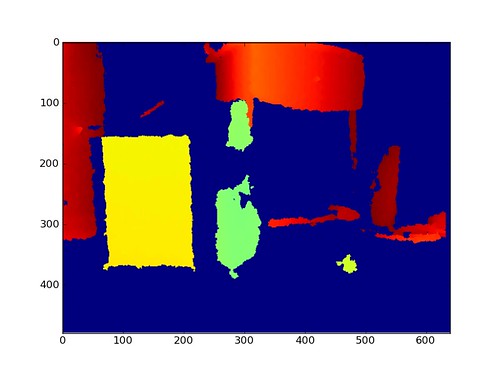

Here I have three shots showing a bottle, a box of granola, a table further back and some furniture behind. I progressively reduce the maximum depth that is displayed, so by the last image we only see the foreground bottle and granola box. These shots are taken using PyOpenNI, numpy and matplotlib:
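The depth cut-off itself is only a line or two of numpy. Below is a minimal sketch of the idea rather than the exact code behind these shots: it assumes the depth map is already a 2D numpy array of distances in millimetres, and the `keep_foreground` helper, the synthetic `scene` and the cut-off values are all made up for illustration (the PyOpenNI capture step is left out entirely).

```python
import numpy as np


def keep_foreground(depth_mm, max_depth_mm):
    """Return a copy of the depth map with everything beyond max_depth_mm set to 0."""
    depth_mm = np.asarray(depth_mm)
    # Pixels at or nearer than the cut-off keep their depth, the rest become 0
    return np.where(depth_mm <= max_depth_mm, depth_mm, 0)


# Hypothetical stand-in for a Kinect frame: a tiny 4x6 depth map in millimetres
scene = np.full((4, 6), 3000, dtype=np.uint16)  # furniture at the back
scene[1:, 1:5] = 1500                           # table further forward
scene[2:, 1:3] = 800                            # granola box
scene[2:, 3:5] = 600                            # bottle

# Progressively pull the cut-off towards the camera, as in the three shots
for limit_mm in (3500, 2000, 1000):
    visible = keep_foreground(scene, limit_mm)
    print(limit_mm, int(np.count_nonzero(visible)), "pixels still visible")
```

The resulting array can be handed straight to matplotlib's `imshow` to display each cut-off level as an image.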

There’s a cool video on using dual Kinects together to remove some of the blind spots and to build a better 3D model:

It looks like the Kinect 2 is a year or two away yet.

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.
