I’ve just uploaded a new mandelbrot.py demo for pyCUDA. It adds a new calculation routine that sits between the numpy (C-based math) and pure-CUDA implementations. In total there are four variants to choose from, and the speed differences are huge!

Update: this Reddit thread has more details, including real-world timings for two client problems (showing speed-ups of 10* to 3,677* over a C task).

Update: I’ve written a High Performance Python tutorial (July 2011, 55 pages) which covers pyCUDA and other technologies; you might find it useful.

This post builds upon my earlier post, pyCUDA on Windows and Mac for super-fast Python math using CUDA.

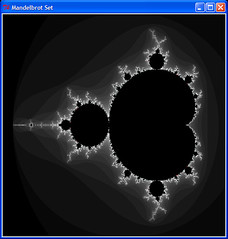

You’ll need CUDA 3.1 and pyCUDA installed, with a compatible NVIDIA graphics card. This version of the Mandelbrot code forces single precision math, which means it’ll work on all CUDA cards (even the older ones; see the full list). It runs on my MacBook (Leopard) and on Windows; the Windows machines use a 9800 GT and a GTX 480. Here’s what it generates:

The big-beast graphics card for my physics client is a GTX 480, NVIDIA’s top of the line consumer card (costing £420 in the UK a few weeks back). It is huge: it covers two slots, uses one PCIe 2.0 x16 slot and needs 300-400W of power (I’m using a 750W PSU to be safe, on a Gigabyte GA H55M S2H motherboard).

The mandelbrot.py demo has four options (e.g. ‘python mandelbrot.py gpu’):

- ‘gpu’ is a pure CUDA solution on the GPU

- ‘gpuarray’ uses a numpy-like CUDA wrapper in Python on the GPU

- ‘numpy’ is a pure Numpy (C-based) solution on the CPU

- ‘python’ is a pure Python solution on the CPU with numpy arrays

The default problem is a 1000*1000 Mandelbrot plot with 1000 max iterations. I’m running this on a 2.9GHz dual core Windows XP SP3 machine with Python 2.6 (only 1 thread is used for all CPU tests). The timings:

- ‘gpu’ – 0.07 seconds

- ‘gpuarray’ – 3.45 seconds – 49* slower than GPU version

- ‘numpy’ – 43.4 seconds – 620* slower than GPU version

- ‘python’ – 1605.6 seconds – 22,937* slower than GPU version

- ‘python’ with psyco.full() – 1428.3 seconds – 20,404* slower than GPU version
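The “slower than GPU” factors above are simply each runtime divided by the ‘gpu’ runtime. A minimal sketch of that arithmetic, using the timings from the list (the dictionary and the helper name `slowdown_vs_gpu` are made up for illustration and are not part of mandelbrot.py):

```python
# Timings in seconds, copied from the list above (GTX 480 machine, single precision).
timings = {
    "gpu": 0.07,
    "gpuarray": 3.45,
    "numpy": 43.4,
    "python": 1605.6,
    "python + psyco": 1428.3,
}


def slowdown_vs_gpu(timings):
    """Return how many times slower each variant is than the 'gpu' variant."""
    baseline = timings["gpu"]
    return {name: seconds / baseline for name, seconds in timings.items()}


slowdowns = slowdown_vs_gpu(timings)
for name, factor in slowdowns.items():
    # The '*' suffix follows the notation used in this post for "times".
    print("%-15s %8.0f* slower than gpu" % (name, factor))
```

The same division gives the other ratios quoted in this post, for example 627 / 0.07 for the double precision ‘python’ run.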

By default mandelbrot.py forces single precision for all the math. Interestingly, on my box, if I let numpy default to numpy.complex128 (two double precision floating point numbers, rather than numpy.complex64 with two single precision floats) then both CPU results are faster, the pure Python one markedly so:

- ‘numpy’ – 34.0 seconds (double precision)

- ‘python’ – 627 seconds (double precision) – 2.5* faster than the single precision version

The ‘22,937*’ figure is a little unfair in light of the 627 second result (which is 8,957* slower) but I wanted to use only single precision math for consistency and compatibility across all CUDA cards (the older cards can only do single precision math).
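For readers unfamiliar with the two complex types, here is a small sketch of the difference. It only shows standard numpy behaviour; the variable names are made up and it does not try to explain the timing gap:

```python
import numpy as np

# numpy's default complex type is complex128: two 64-bit floats per element.
default = np.array([1 + 2j, -0.5 + 0.25j])

# Forcing single precision gives complex64: two 32-bit floats per element.
single = default.astype(np.complex64)

print(default.dtype, default.itemsize)  # dtype name and bytes per element
print(single.dtype, single.itemsize)
```

Each complex64 element takes half the memory of a complex128 element, which matters when copying large arrays to and from a graphics card.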

On my older dual core 2.66GHz machine with a 9800 GT I get:

- ‘gpu’ – 1.5 seconds

- ‘gpuarray’ – 7.1 seconds – 4.7* slower than GPU version

- ‘numpy’ – 51 seconds – 34* slower than GPU version

- ‘python’ – 1994.3 seconds – 1,329* slower than GPU version

If we compare the 0.07 seconds for the GTX 480 against the 1.5 seconds for the 9800 GT (the machines differ, but the runtime is just measuring the GPU work) then the GTX 480 is 21* faster than the 9800 GT. That’s not a bad speed-up for a couple of years’ difference in architectures.

If you take a look at the source code you’ll see that the ‘gpu’ option uses a lump of C-like CUDA code. Behind the scenes all pyCUDA code is converted into this C-like code and then compiled down to PTX via NVIDIA’s compiler. This is the way to go if you understand the memory model and you want to write very fast code.
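To give a flavour of what such a lump of CUDA code looks like when driven from Python, here is a minimal sketch. It is not the kernel shipped in mandelbrot.py: the kernel name `mandel`, the split into separate real and imaginary arrays, and the wrapper `mandelbrot_gpu` are all assumptions made for illustration. The pyCUDA calls used (`SourceModule`, `get_function`, `drv.In`, `drv.Out`) are the standard ones.

```python
import numpy as np

# Hypothetical kernel for illustration; one GPU thread handles one point q.
KERNEL_SOURCE = """
__global__ void mandel(const float *q_re, const float *q_im,
                       int *output, int n, int maxiter)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx >= n) return;            // guard the partially filled last block

    float z_re = 0.0f, z_im = 0.0f;
    int escaped_at = maxiter;        // stays at maxiter if the point never escapes
    for (int i = 0; i < maxiter; i++) {
        float tmp = z_re * z_re - z_im * z_im + q_re[idx];
        z_im = 2.0f * z_re * z_im + q_im[idx];
        z_re = tmp;
        if (z_re * z_re + z_im * z_im > 4.0f) {   // |z| > 2
            escaped_at = i;
            break;
        }
    }
    output[idx] = escaped_at;
}
"""


def mandelbrot_gpu(q, maxiter, threads_per_block=256):
    """Run the kernel above over a complex array q (needs pyCUDA and a CUDA card)."""
    # Imported here so that this module can still be loaded on a machine without a GPU.
    import pycuda.autoinit  # noqa: F401  (sets up a CUDA context)
    import pycuda.driver as drv
    from pycuda.compiler import SourceModule

    kernel = SourceModule(KERNEL_SOURCE).get_function("mandel")

    q = np.asarray(q, dtype=np.complex64).ravel()
    q_re = np.ascontiguousarray(q.real)   # float32 copies the kernel can read
    q_im = np.ascontiguousarray(q.imag)
    output = np.zeros(q.size, dtype=np.int32)

    blocks = (q.size + threads_per_block - 1) // threads_per_block
    kernel(drv.In(q_re), drv.In(q_im), drv.Out(output),
           np.int32(q.size), np.int32(maxiter),
           block=(threads_per_block, 1, 1), grid=(blocks, 1))
    return output
```

The whole iteration for a point stays inside one thread and only single precision floats are used, in keeping with the single precision choice described above. A one-dimensional grid like this is enough for the default 1000*1000 problem, but very large arrays would need a different grid layout on older cards.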

The gpuarray option uses a numpy-like interface to pyCUDA which, behind the scenes, is converted into CUDA code. Because it is compiled from Python code the resulting CUDA code isn’t as efficient – the compiler can’t make the same assumptions about memory usage as I can make when hand-crafting CUDA code (at least – that’s my best understanding at present!).
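A minimal sketch of the numpy-like style, assuming pyCUDA’s `gpuarray` module; the function names are made up and this is not the routine from mandelbrot.py. The numpy function is there only to show that the arithmetic line is written identically in both cases:

```python
import numpy as np


def iterate_numpy(q, steps):
    """Apply z = z*z + q `steps` times on the CPU with numpy arrays."""
    q = np.asarray(q, dtype=np.complex64)
    z = np.zeros(q.shape, dtype=np.complex64)
    for _ in range(steps):
        z = z * z + q
    return z


def iterate_gpuarray(q, steps):
    """Same arithmetic on the GPU via pycuda.gpuarray (needs pyCUDA and a CUDA card)."""
    # Imported here so that this module can still be loaded on a machine without a GPU.
    import pycuda.autoinit  # noqa: F401  (sets up a CUDA context)
    import pycuda.gpuarray as gpuarray

    q = np.asarray(q, dtype=np.complex64)
    q_gpu = gpuarray.to_gpu(q)
    z_gpu = gpuarray.to_gpu(np.zeros(q.shape, dtype=np.complex64))
    for _ in range(steps):
        # Each operator here is carried out on the card as an array-wide operation.
        z_gpu = z_gpu * z_gpu + q_gpu
    return z_gpu.get()  # copy the result back to a numpy array
```

One likely reason this style is slower than a hand-written kernel is that every arithmetic step is a separate trip over the whole array, rather than one thread running the full iteration for its point. Treat that as a reading of how the interface behaves, not as a measured result.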

The numpy version uses C-based math running on the CPU; generally it is regarded as being ‘pretty darned fast’. The python version uses numpy arrays with straight Python arithmetic, which makes it awfully slow. Psyco 2.0.0 makes it a bit faster.
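The difference between the two CPU styles can be sketched as follows. These are simplified stand-ins written for this illustration, not the functions from mandelbrot.py; the names `mandelbrot_python` and `mandelbrot_numpy` are made up:

```python
import numpy as np


def mandelbrot_python(q, maxiter):
    """Pure Python arithmetic: the interpreter runs every multiply and add."""
    output = np.zeros(q.shape, dtype=np.int32)
    for i in range(q.size):
        z = 0j
        for n in range(maxiter):
            z = z * z + q[i]
            if abs(z) > 2.0:
                output[i] = n   # iteration at which this point escaped
                break
    return output


def mandelbrot_numpy(q, maxiter):
    """numpy arithmetic: each step updates all unescaped points in C."""
    output = np.zeros(q.shape, dtype=np.int32)
    z = np.zeros(q.shape, dtype=q.dtype)
    active = np.ones(q.shape, dtype=bool)   # points that have not escaped yet
    for n in range(maxiter):
        z[active] = z[active] * z[active] + q[active]
        escaped = active & (np.abs(z) > 2.0)
        output[escaped] = n
        active &= ~escaped
    return output


points = np.array([0, 1, 2, 3, -1], dtype=np.complex128)
print(mandelbrot_python(points, 10))
print(mandelbrot_numpy(points, 10))
```

Both functions give the same result for a one-dimensional array of points. The Python version makes up to `q.size * maxiter` trips through the interpreter, while the numpy version makes only `maxiter` trips, each doing its work over whole arrays in compiled code.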

Feedback and extensions are welcomed via the wiki!

If you want to get started then make sure you have a compatible CUDA card, get pyCUDA (installation instructions), compile pyCUDA (takes 30 minutes from scratch if you’re on a well-known system), try the examples and run mandelbrot.py. The mailing list is helpful.

It’d be nice to see some comparisons with PyPy, ShedSkin and other Python implementations. You’ll find links in my older ShedSkin post. It’ll also be interesting to tie this in to some of the A.I. projects in the A.I. Cookbook; I’ll have to ponder some of the problems that might be tackled.

Books:

The following two books will be useful if you’re new to CUDA. The first is very friendly, I’m still finding it very useful.

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.
