A couple of weeks back I presented an Artificial Intelligence evening at FlashBrighton with John Montgomery and Emily Toop. The night covered optical character recognition, face detection, robots and some futurology. A video link should follow.

Optical Character Recognition to Read Plaques

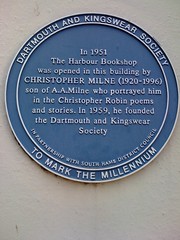

Recently I’ve been playing with OCR to read text in photos. A particular example I care about is extracting the text from English Heritage Plaques for the OpenPlaques project:

I gave an overview of the tesseract open source OCR tool (originally created by HP), drawing in part on this tesseract OSCON paper. Some notes:

- tesseract ranked highly in international competitions for scanned-image text extraction

- it works better if you remove non-text regions (e.g. you isolate just the blue plaque in the above image) and convert the image to greyscale or threshold it to black and white

- it runs very quickly – it’ll extract text in a fraction of a second so it will run on a mobile phone (iPhone ports exist)
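The preprocessing tip in the list above can be sketched in a few lines. This is a minimal pure-Python illustration, not the code shown on the night: it assumes the photo is already loaded as rows of `(r, g, b)` tuples, and the function names, the `margin` that decides what counts as "blue" and the `cutoff` are all hypothetical choices. A real pipeline would use an imaging library and then hand the result to tesseract.

```python
def to_grey(pixel):
    """Approximate luminance of an (r, g, b) pixel as an integer 0..255."""
    r, g, b = pixel
    return (299 * r + 587 * g + 114 * b) // 1000


def blue_bounding_box(image, margin=40):
    """Return (top, left, bottom, right) around pixels where blue clearly
    dominates red and green, or None if there are no such pixels."""
    rows = [y for y, row in enumerate(image)
            if any(b - max(r, g) > margin for r, g, b in row)]
    cols = [x for row in image
            for x, (r, g, b) in enumerate(row) if b - max(r, g) > margin]
    if not rows:
        return None
    return min(rows), min(cols), max(rows) + 1, max(cols) + 1


def crop(image, box):
    """Keep only the pixels inside the bounding box."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def threshold(image, cutoff=128):
    """Turn every pixel into pure black (0) or pure white (255)."""
    return [[255 if to_grey(p) >= cutoff else 0 for p in row] for row in image]
```

With a plaque, the white lettering survives the threshold as white and the blue background becomes black, so the OCR step only sees the text region.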

To get people thinking about the task from the computer’s point of view I had everyone read out the text from this blurry photo. Treating the image as a computer would see it shows that you need several passes to learn which country is involved and to guess at some of the terms:

You can guess that the domain is music/theatre (which helps you to specialise the dictionary you’re using), based in the US (so you know that 1.25 is $1.25USD) and even though the time is hard to read it is bound to be 7.30PM (rather than 7.32 or 7.37) because events normally start on the hour or half hour. General knowledge about the domain greatly increases the chance that OCR can extract the correct text.
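Two of those domain hints are easy to sketch in code: snapping a hard-to-read time to the nearest half hour, and replacing a misread word with the closest entry in a domain dictionary. This is an illustrative sketch only; the function names, the example vocabulary and the `cutoff` value are assumptions, and it uses Python's standard `difflib` module rather than anything built into tesseract.

```python
import difflib


def correct_word(word, vocabulary, cutoff=0.6):
    """Return the closest vocabulary word, or the input if nothing is close."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def snap_time(hour, minute, step=30):
    """Round a time to the nearest multiple of `step` minutes."""
    total = hour * 60 + minute
    snapped = (total + step // 2) // step * step
    return (snapped // 60) % 24, snapped % 60


# Hypothetical music/theatre vocabulary for the example
vocabulary = ["concert", "theatre", "orchestra"]
print(correct_word("c0ncert", vocabulary))
print(snap_time(7, 32), snap_time(7, 37))
```

Both uncertain readings of the time (7.32 and 7.37) snap to 7.30, which is the kind of correction a human reader makes without thinking.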

I talked about the forthcoming competition to write a Plaque-transcriber system. That project is close to starting and you can see demo Python source code in the AI Cookbook.

Optical Character Recognition Web Service and Translator iPhone Demo

To help make OCR a bit easier to use I’ve set up a simple website: http://ocr.aicookbook.com/. You call a URL with an image that’s on the web (I use flickr for my examples) and it returns a JSON string with the extracted text. The website is a few lines of Python code created using the fabulous bottle.py.

The JSON also contains a French translation and mp3 links for text to speech. This shows how easy it is to make a visual-assist device for people with poor sight.
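From the client's side, using a service like this comes down to building a request URL and unpacking the JSON. The sketch below is hypothetical throughout: the query parameter name `url` and the JSON keys `text`, `french` and `mp3` are assumptions made for illustration, not the service's documented interface, and no network call is made.

```python
import json
from urllib.parse import urlencode


def build_request_url(image_url, base="http://ocr.aicookbook.com/"):
    """Build the request URL; 'url' is a hypothetical parameter name."""
    return base + "?" + urlencode({"url": image_url})


def parse_response(body):
    """Pull the interesting fields out of a JSON reply (key names assumed)."""
    data = json.loads(body)
    return {
        "text": data.get("text", ""),
        "french": data.get("french", ""),
        "mp3_links": data.get("mp3", []),
    }


# A made-up reply with the assumed shape, for illustration only
sample = '{"text": "Hello", "french": "Bonjour", "mp3": ["http://example.com/hello.mp3"]}'
print(build_request_url("http://example.com/plaque.jpg"))
print(parse_response(sample))
```

A phone app then only needs to fetch that URL, play the mp3 links and display the text fields.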

Emily built an iPhone demo based on this web service – you take a photograph of some text, it uploads the photo to flickr, retrieves the JSON and then plays the mp3s and shows you the translated text.

OCR on videos

The final OCR demo shows a proof of concept that extracts keywords from ShowMeDo’s screencast videos. The screencasts show programming in action – it is easy to extract frames, perform OCR and build up strong lists of keywords. These keywords can then be added back to the ShowMeDo video page to give Google more indexable content.
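The keyword-building step can be sketched as follows, assuming the frames have already been extracted and passed through OCR so that each frame is a plain string. The stopword list, the `min_frames` threshold and the function names are hypothetical; requiring a word to appear in several frames is one simple way to filter out one-off OCR noise.

```python
import re
from collections import Counter

# A tiny illustrative stopword list; a real one would be longer
STOPWORDS = {"the", "and", "for", "with", "this", "that"}


def frame_keywords(text):
    """Return the set of candidate keywords found in one frame's OCR text."""
    words = re.findall(r"[a-z_][a-z0-9_]{2,}", text.lower())
    return {w for w in words if w not in STOPWORDS}


def video_keywords(frame_texts, min_frames=2):
    """Keep words seen in at least `min_frames` frames, most frequent first."""
    counts = Counter()
    for text in frame_texts:
        counts.update(frame_keywords(text))
    return [word for word, n in counts.most_common() if n >= min_frames]


frames = ["import numpy as np", "import numpy\nprint(np.zeros(3))", "def main(): import sys"]
print(video_keywords(frames))
```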

There’s a write-up of the early system here.

OCR futurology

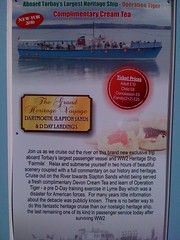

Text is all around us and mobile phones are everywhere. It strikes me that sooner or later we’ll be pointing our mobile phone at a poster like this and we’ll get extra information in return:

From the photo we can extract names of places. We also know the phone’s location, so a Wikipedia geo-lookup will return relevant pages. We can probably also extract dates and costs from posters and these can go into our calendar. I used tesseract on this image and extracted enough information to link to several Wikipedia pages with history and a map.
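Pulling dates and costs out of OCR'd poster text is mostly a pattern-matching job. The sketch below uses two regular expressions as an illustration; the patterns, the sample text and the function names are assumptions, and they only cover simple forms such as "12th June" and "£7.50".

```python
import re

MONTHS = ("January|February|March|April|May|June|July|"
          "August|September|October|November|December")
# Day number, optional ordinal suffix, then a month name
DATE_RE = re.compile(r"\b(\d{1,2})(?:st|nd|rd|th)?\s+(" + MONTHS + r")\b", re.IGNORECASE)
# Pound or dollar sign followed by an amount with optional pence/cents
COST_RE = re.compile(r"[£$]\s?(\d+(?:\.\d{2})?)")


def extract_dates(text):
    """Return (day, month) pairs found in the text."""
    return [(int(day), month.capitalize()) for day, month in DATE_RE.findall(text)]


def extract_costs(text):
    """Return the amounts found in the text as floats."""
    return [float(amount) for amount in COST_RE.findall(text)]


# Made-up poster text for illustration
poster = "Guided tour, 12th june and 3 July, tickets £7.50 or $10"
print(extract_dates(poster))
print(extract_costs(poster))
```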

Face Detection for Privacy Invasion

John and I built a system for correlating gowalla check-ins with faces seen in images from the SkiffCam – the webcam that’s hosted in the Skiff co-working space. The goal was to show that we lose quite a lot of privacy without realising it – the SkiffCam has 29,000 images (1GB of data) dating back over several years.

Using openCV’s face detection system I extracted thousands of faces. John retrieved all the gowalla check-ins based at the Skiff and built a web service that lets us correlate the faces with check-ins. We showed faces for many well-known Brightoners including Seb, Niqui, Paulo, Jon & Anna and Nat.
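The correlation step can be illustrated with a simple time-window match: a face image is linked to a user if it was captured close to one of their check-ins. This is a sketch under assumptions, not the web service John built; the data shapes, the ten-minute window, the file names and the user names are all hypothetical.

```python
from datetime import datetime, timedelta


def correlate(checkins, face_images, window_minutes=10):
    """Map each user to the face images captured within the time window
    around any of their check-ins.

    checkins:    list of (user, datetime) pairs
    face_images: list of (filename, datetime) pairs
    """
    window = timedelta(minutes=window_minutes)
    matches = {}
    for user, checked_in_at in checkins:
        for filename, captured_at in face_images:
            if abs(captured_at - checked_in_at) <= window:
                matches.setdefault(user, []).append(filename)
    return matches


# Made-up data for illustration
checkins = [("alice", datetime(2010, 6, 1, 9, 0)), ("bob", datetime(2010, 6, 1, 14, 0))]
faces = [("face_001.png", datetime(2010, 6, 1, 9, 5)),
         ("face_002.png", datetime(2010, 6, 1, 12, 0)),
         ("face_003.png", datetime(2010, 6, 1, 13, 55))]
print(correlate(checkins, faces))
```

A match like this only says a face was seen near the time of a check-in, so several people arriving together would all be linked to the same images.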

Given a person’s face we could then train a face recogniser to see other occurrences of that person at the Skiff even if they’re not checking in with gowalla. We can also mine their Twitter accounts for other identifying data like blogs and build a profile of where they go, who they know and what they talk about. This feels pretty invasive – all with open source tools and public data.

Emotion detection

Building on the face detector I next demonstrated the FaceL face labeling project from Colorado State Uni, built on pyVision. The tool works out of the box on a Mac – it can learn several faces or poses during a live demo. Most face recognisers only label the name of the person – the difference with FaceL is that it can recognise basic emotional states such as ‘happy’, ‘neutral’ and ‘sad’. This makes it really easy to work towards an emotion-detecting user interface.

During my demo I showed FaceL correctly recognising ‘happy’ and ‘sad’ on my face, then ‘left’ and ‘right’ head poses, then ‘up’ and ‘down’ poses. I suspect that with the up/down poses it is really easy to build a nod-detecting interface!
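A nod detector along those lines could be as small as the sketch below. It assumes some face labeller emits one pose label per frame as a string; the label names match the poses mentioned above, but the functions and the definition of a nod (two changes of vertical direction) are hypothetical and are not part of FaceL.

```python
def count_nods(pose_labels):
    """Count nods in a stream of per-frame pose labels.

    A nod is taken to be two vertical swings (e.g. up -> down -> up).
    Labels other than 'up' and 'down' are ignored.
    """
    vertical = [p for p in pose_labels if p in ("up", "down")]
    swings = sum(1 for prev, cur in zip(vertical, vertical[1:]) if prev != cur)
    return swings // 2


def is_nodding(pose_labels, min_nods=1):
    """True if the label stream contains at least `min_nods` nods."""
    return count_nods(pose_labels) >= min_nods


print(is_nodding(["neutral", "up", "up", "down", "down", "up", "neutral"]))
```

Because non-vertical labels are dropped, an 'up' and a 'down' separated by a long pause still count as a swing; a real interface would probably want a time limit as well.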

Headroid2 – a Face Tracking Robot

Finally I demo’d Headroid2 – my face tracking robot (using the same openCV module as above) that uses an Arduino, a servo board, pySerial and a few lines of code to give the robot the ability to track faces, smile and frown:

Here’s a video of the earlier version (without the smiling face feedback):

For full details including build instructions see building a face tracking robot.
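The heart of a face tracker like this is a small control loop: find where the face sits in the frame, then nudge the servo towards it. The sketch below shows only that arithmetic and is not Headroid2's code; the `gain`, the 0 to 180 degree range and the one-byte serial command are assumptions, and the sign of the correction depends on how the servo is mounted.

```python
def face_offset(face_box, frame_width):
    """Horizontal offset of the face centre from the frame centre, in [-1, 1].

    face_box is (x, y, width, height) in pixels, as a face detector
    would typically report it.
    """
    x, y, w, h = face_box
    centre = x + w / 2
    return (centre - frame_width / 2) / (frame_width / 2)


def next_servo_angle(current_angle, offset, gain=10.0, lo=0, hi=180):
    """Take a proportional step towards the face, clamped to the servo range."""
    target = current_angle + gain * offset
    return int(max(lo, min(hi, round(target))))


def servo_command(angle):
    """Encode the angle as a single byte (a hypothetical protocol; with
    pySerial this could be passed to the port's write method)."""
    return bytes([angle])


offset = face_offset((540, 0, 100, 100), frame_width=640)
angle = next_servo_angle(90, offset)
print(offset, angle, servo_command(angle))
```

Keeping the gain small means the head moves a little on every frame rather than jumping, which tends to look smoother and avoids overshooting the face.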

EuroPython

I’ll bring Headroid3 (this adds face-seeking behaviour) to EuroPython in a few weeks. Hopefully I can find a few other A.I. folk and we can run some demos.

Reading material:

If you’re curious about A.I. then the following books will interest you:

Ian is a Chief Interim Data Scientist via his Mor Consulting. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.