Last week I played with the Optical Character Recognition system tesseract applied to video data. The goal – extract keywords from the video frames so Google has useful text to index.

I chose to work with ShowMeDo’s screencasts as many show programming in action – there’s great keyword information in these videos that can be exposed for Google to crawl. This builds on my recent OCR for plaques project.

I’ll blog about the full system in the future; this is a quick how-to if you want to try it yourself.

First – get a video. I downloaded video 10370000.flv from Introducing numpy arrays (part 1 of 11).

Next – extract a frame. Using ffmpeg I extracted a frame at 240 seconds as a JPG:

ffmpeg -i 10370000.flv -y -f image2 -ss 240 -sameq -t 0.001 10370000_240.jpg
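If you want to grab frames at several timestamps, the ffmpeg call above can be wrapped in a few lines of Python. This is only a sketch: the function names `build_frame_command` and `extract_frame` are my own, the flags simply mirror the command above, and it assumes `ffmpeg` is on your PATH. Newer ffmpeg builds have dropped `-sameq`, so you may need to remove that flag.

```python
import subprocess


def build_frame_command(video_path, seconds, output_path):
    """Build the ffmpeg argument list for grabbing one frame at `seconds`."""
    return [
        "ffmpeg",
        "-i", video_path,
        "-y",                 # overwrite the output file if it exists
        "-f", "image2",       # write a still image
        "-ss", str(seconds),  # seek to this offset in the video
        "-sameq",             # as in the command above; may be rejected by newer builds
        "-t", "0.001",        # keep only a tiny slice, i.e. a single frame
        output_path,
    ]


def extract_frame(video_path, seconds, output_path):
    """Run ffmpeg and raise CalledProcessError if it fails."""
    subprocess.run(build_frame_command(video_path, seconds, output_path), check=True)


if __name__ == "__main__":
    extract_frame("10370000.flv", 240, "10370000_240.jpg")
```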

Tesseract needs TIF input files (not JPGs) so I used GIMP to convert to TIF.
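Converting by hand in GIMP gets tedious for more than a few frames. As a rough alternative (not what I did above), the conversion could be scripted with the third-party Pillow library, assuming it is installed. The helper names `tif_path_for` and `convert_to_tif` are made up for this sketch.

```python
from pathlib import Path


def tif_path_for(image_path):
    """Return the same path with its extension swapped to .tif."""
    return str(Path(image_path).with_suffix(".tif"))


def convert_to_tif(image_path):
    """Re-save an image as TIFF next to the original and return the new path."""
    # Imported here so the path helper works even without Pillow installed.
    from PIL import Image

    tif_path = tif_path_for(image_path)
    with Image.open(image_path) as img:
        img.save(tif_path, format="TIFF")
    return tif_path


if __name__ == "__main__":
    print(convert_to_tif("10370000_240.jpg"))
```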

Finally I applied tesseract to extract text:

tesseract 10370000_240.tif 10370000_240 -l eng

This yields:

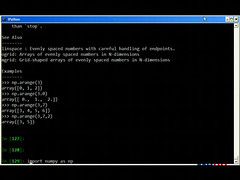

than rstupr . See Also linspate : Evenly spaced numbers with careful handling of endpoints. grid: Arrays of evenly spared numbers in Nrdxmensmns grid: Grid—shaped arrays of evenly spaced numbers in Nwiunensxnns Examples >>> np.arange(3) ¤rr¤y([¤. 1. 2]) >>> np4arange(3.B) array([ B., 1., 2.]) >>> np.arange(3,7) array([3, A, S, 6]) >>> np.arange(3,7,?) ·=rr··¤y<[3. 5]) III Ill

Obviously there’s some garbage in the above but there are also a lot of useful keywords!
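The tesseract step can be scripted in the same spirit. A minimal sketch, assuming the `tesseract` binary is on your PATH; it relies on tesseract writing its result to the output base name plus `.txt`. The names `build_tesseract_command` and `run_ocr` are hypothetical helpers, not part of tesseract.

```python
import subprocess
from pathlib import Path


def build_tesseract_command(tif_path, language="eng"):
    """Build the tesseract argument list; output base is the input minus .tif."""
    output_base = str(Path(tif_path).with_suffix(""))
    return ["tesseract", tif_path, output_base, "-l", language]


def run_ocr(tif_path, language="eng"):
    """Run tesseract on one TIF and return the extracted text."""
    command = build_tesseract_command(tif_path, language)
    subprocess.run(command, check=True)
    # tesseract appends .txt to the output base it was given
    text_path = Path(command[2] + ".txt")
    return text_path.read_text(encoding="utf-8", errors="replace")


if __name__ == "__main__":
    print(run_ocr("frame.tif"))
```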

To clean up the extraction I’ll be experimenting with:

- Using the original AVI video rather than the FLV, which contains compression artefacts that reduce the visual quality. The FLV is also watermarked with ShowMeDo’s logo, which hurts some images

- Cleaning the image – perhaps applying some thresholding or highlighting to make the text stand out. The green text may be causing a problem in this image

- Training tesseract to read the terminal fonts commonly found in ShowMeDo videos
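To make the thresholding idea from the list above concrete, here is a small pure-Python sketch. It assumes bright text on a dark terminal background (I haven't confirmed that holds for every video), uses the standard luma weights to turn RGB into grey, and the cutoff of 128 is an arbitrary starting point. `luminance` and `threshold_pixels` are names invented for the example.

```python
def luminance(rgb):
    """Approximate perceived brightness of an (R, G, B) pixel, 0 to 255."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def threshold_pixels(pixels, cutoff=128):
    """Map each RGB pixel to pure white (255) or pure black (0)."""
    return [255 if luminance(p) > cutoff else 0 for p in pixels]


if __name__ == "__main__":
    # Green terminal text, black background, white text
    sample = [(0, 255, 0), (0, 0, 0), (255, 255, 255)]
    print(threshold_pixels(sample))
```

Pure green comes out at a luminance of roughly 150, so with this cutoff it lands on the same side as white text. Dimmer greens would need a lower cutoff.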

I tried four images for this test and useful text was extracted in every case. I suspect that by rejecting short words (fewer than four characters) and keeping only words that appear at least twice in the video, I’ll have a clean set of useful keywords.
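The filtering rule I have in mind could look something like the sketch below. It is untested against real video output; `extract_keywords` is a hypothetical helper, and splitting on runs of letters is my assumption about what counts as a "word" (so `np.arange` becomes `np` and `arange`).

```python
import re
from collections import Counter


def extract_keywords(frame_texts, min_length=4, min_count=2):
    """Return words with at least min_length letters seen min_count+ times overall."""
    counts = Counter()
    for text in frame_texts:
        # Lower-case so "Array" and "array" count as the same word
        words = re.findall(r"[a-z]+", text.lower())
        counts.update(w for w in words if len(w) >= min_length)
    return sorted(w for w, n in counts.items() if n >= min_count)


if __name__ == "__main__":
    frames = [
        "Evenly spaced numbers np.arange(3)",
        "arange returns evenly spaced values; rstupr",
    ]
    print(extract_keywords(frames))
```

One-off garbage like `rstupr` drops out because it only appears once, while `arange`, `evenly` and `spaced` survive.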

Update – the blog for the A.I. Cookbook is now active, more A.I. and robot updates will occur there.

Ian is a Chief Interim Data Scientist via his Mor Consulting. Sign-up for Data Science tutorials in London and to hear about his data science thoughts and jobs. He lives in London, is walked by his high energy Springer Spaniel and is a consumer of fine coffees.
